Introduction

Backend engineers increasingly work with data-heavy systems, whether they support analytics pipelines, API-driven applications, internal dashboards, or large-scale ETL processes. Although Pandas is traditionally seen as a tool for data scientists, it is equally valuable for backend developers who need to process logs, validate datasets, clean incoming data, or generate summaries. In this guide, you will learn why Pandas matters for backend engineering, how to use its core features effectively, and how it fits into common backend workflows. These techniques will help you build more robust data processing systems and prototype solutions faster.

Why Pandas Is Useful for Backend Engineers

Backend systems frequently handle tasks that require structured data analysis, and Pandas provides concise, high-performance APIs for them. Backend developers can use it to clean and validate incoming application data before persistence, parse logs and transform records, prepare metrics for analytics dashboards, and aggregate large datasets without writing custom loops. It is also useful for prototyping ETL workflows before building production pipelines, which surfaces logic errors early, and for quickly generating insights that inform backend architecture decisions.

Because Pandas operates on in-memory data structures, it also allows quick experimentation before implementing production-grade solutions. The library’s vectorized operations execute significantly faster than Python loops, making it practical for processing millions of rows during development.

Core Pandas Concepts Backend Engineers Should Know

Before applying Pandas to backend workloads, you should understand a few foundational concepts that power its API.

Series and DataFrames

A Series represents a one-dimensional labeled array, while a DataFrame represents a two-dimensional table of rows and columns. These objects form the basis of nearly all Pandas operations.
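The "labeled" part of that definition has a practical consequence. When you combine two Series, Pandas aligns values by index label, not by position. The following is a minimal sketch using hypothetical per-endpoint counts:

```python
import pandas as pd

# Hypothetical counters keyed by endpoint; note the two Series list labels in different orders
requests = pd.Series({'/login': 120, '/orders': 340, '/search': 95})
errors = pd.Series({'/orders': 12, '/login': 3})

# Division matches values by label; '/search' has no error count, so its result is NaN
error_rate = errors / requests
print(error_rate)

# fill_value treats a label missing from one side as 0 instead of producing NaN
error_rate_filled = errors.div(requests, fill_value=0)
```

By default, missing labels produce NaN. That is usually something you want to notice rather than silently treat as zero, and `fill_value` makes the zero assumption explicit.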

```python
import pandas as pd

# Creating a DataFrame from a dictionary
df = pd.DataFrame({
    'user_id': [1, 2, 3, 4, 5],
    'email': ['alice@example.com', 'bob@example.com', None, 'dave@example.com', 'eve@example.com'],
    'status': ['active', 'pending', 'disabled', 'active', 'active'],
    'created_at': pd.to_datetime(['2024-01-15', '2024-02-20', '2024-03-10', '2024-04-05', '2024-05-12']),
    'requests': [150, 23, 0, 89, 312],
})

# Accessing a Series (single column)
user_statuses = df['status']

# Basic DataFrame info
df.info()  # Prints column types and non-null counts (returns None, so don't wrap it in print)
print(df.describe())  # Statistical summary of numeric columns
```

Indexing and Filtering

Filtering rows is essential for analyzing subsets of data. Pandas supports multiple filtering approaches.

```python
# Boolean filtering
active_users = df[df['status'] == 'active']

# Multiple conditions
high_activity_active = df[(df['status'] == 'active') & (df['requests'] > 100)]

# Using query() for readable filters
result = df.query('status == "active" and requests > 50')

# Select specific columns
user_emails = df[['user_id', 'email']]

# Using loc for label-based selection
recent_users = df.loc[df['created_at'] > '2024-03-01', ['user_id', 'email', 'status']]

# Using iloc for position-based selection
first_three = df.iloc[:3]
```

Reading Data from Files

Pandas supports reading CSV, JSON, SQL queries, Parquet files, Excel, and more.

```python
import pandas as pd
from sqlalchemy import create_engine, text

# CSV files
df = pd.read_csv('logs.csv')

# JSON files (nested objects load as dict-valued columns; pd.json_normalize flattens them)
df = pd.read_json('data.json')
df = pd.read_json('nested.json', orient='records')

# Parquet files (efficient for large datasets; requires pyarrow or fastparquet)
df = pd.read_parquet('data.parquet')

# SQL queries directly into DataFrames
engine = create_engine('postgresql://user:pass@localhost/db')
df = pd.read_sql(
    text('SELECT * FROM users WHERE status = :status'),  # named bind parameter
    engine,
    params={'status': 'active'},
)

# Excel files (requires openpyxl for .xlsx)
df = pd.read_excel('report.xlsx', sheet_name='Users')
```

These capabilities make Pandas an excellent tool for inspecting backend data quickly.
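`pd.read_json` works best when the JSON is already table-shaped. Payloads from webhooks or third-party APIs are often nested. `pd.json_normalize` flattens nested objects into dotted columns, and its `record_path` and `meta` arguments expand a nested list into one row per element. The following is a sketch using a hypothetical order payload:

```python
import pandas as pd

# Hypothetical nested payload, shaped like a typical webhook body
payload = [
    {'id': 1, 'user': {'name': 'alice', 'plan': 'pro'},
     'items': [{'sku': 'A1', 'qty': 2}]},
    {'id': 2, 'user': {'name': 'bob', 'plan': 'free'},
     'items': [{'sku': 'B7', 'qty': 1}, {'sku': 'C3', 'qty': 5}]},
]

# Nested dicts become dotted columns (user.name, user.plan); the items list stays one column
orders = pd.json_normalize(payload)

# One row per line item, carrying the parent order id and user name along as metadata
items = pd.json_normalize(payload, record_path='items', meta=['id', ['user', 'name']])
print(items)
```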

Applying Pandas to Backend Workflows

Now let’s explore how Pandas directly supports tasks backend engineers perform routinely.

Log Parsing and Analysis

Backend systems generate logs that often require filtering or aggregation. Once the logs are loaded into a DataFrame, Pandas can express each of these steps in a line or two.

```python
import pandas as pd
from datetime import datetime, timedelta

# Load server logs
logs = pd.read_csv('server_logs.csv', parse_dates=['timestamp'])

# Filter errors from the last 24 hours (assumes naive timestamps in the server's local time)
cutoff = datetime.now() - timedelta(hours=24)
recent_errors = logs[
    (logs['level'] == 'ERROR') &
    (logs['timestamp'] > cutoff)
]

# Count errors by endpoint
errors_per_endpoint = recent_errors.groupby('endpoint').size().sort_values(ascending=False)
print('Top error-prone endpoints:')
print(errors_per_endpoint.head(10))

# Analyze error patterns over time (error counts per hour of day, across all days)
logs['hour'] = logs['timestamp'].dt.hour
errors_by_hour = logs[logs['level'] == 'ERROR'].groupby('hour').size()

# Find response time outliers
p95_response = logs['response_time_ms'].quantile(0.95)
slow_requests = logs[logs['response_time_ms'] > p95_response]
print(f'\nSlow requests (>p95): {len(slow_requests)}')
print(slow_requests.groupby('endpoint')['response_time_ms'].mean())
```

This type of analysis helps teams detect patterns, diagnose issues, and understand system behavior.
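Grouping by `dt.hour` folds the same hour from different days together. To track errors over actual time windows and normalize for traffic, you can resample on the timestamp and compute an error rate per bucket. The following sketch uses a few hypothetical log rows:

```python
import pandas as pd

# Hypothetical log rows; real logs would come from pd.read_csv(..., parse_dates=['timestamp'])
logs = pd.DataFrame({
    'timestamp': pd.to_datetime([
        '2024-06-01 10:05', '2024-06-01 10:20', '2024-06-01 10:45',
        '2024-06-01 11:10', '2024-06-01 11:30', '2024-06-01 12:15',
    ]),
    'level': ['INFO', 'ERROR', 'ERROR', 'INFO', 'INFO', 'ERROR'],
})

# Flag errors as 0/1, then count requests and errors in fixed 60-minute windows
per_hour = (
    logs.set_index('timestamp')
    .assign(is_error=lambda d: d['level'].eq('ERROR').astype(int))
    .resample('60min')['is_error']
    .agg(['count', 'sum'])
    .rename(columns={'count': 'total', 'sum': 'errors'})
)
per_hour['error_rate'] = per_hour['errors'] / per_hour['total']
print(per_hour)
```

An empty window gets a `total` of 0 and a NaN rate, which makes a gap in traffic visible instead of reporting it as error-free.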

Data Validation Before Persistence

Before writing to a database, backend engineers frequently validate incoming data. Pandas simplifies this with vectorized operations.

```python
import pandas as pd


def validate_user_data(records: list[dict]) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Validate user records and return valid/invalid DataFrames."""
    df = pd.DataFrame(records)

    # Define validation rules
    email_pattern = r'^[\w.-]+@[\w.-]+\.\w+$'

    # Check for missing required fields
    missing_email = df['email'].isnull()
    missing_name = df['name'].isnull() | (df['name'].str.strip() == '')

    # Validate email format (na=False makes missing emails count as non-matching too)
    invalid_email = ~df['email'].str.match(email_pattern, na=False)

    # Validate age range
    invalid_age = (df['age'] < 0) | (df['age'] > 150)

    # Combine all validation failures
    is_invalid = missing_email | missing_name | invalid_email | invalid_age
    valid_df = df[~is_invalid].copy()
    invalid_df = df[is_invalid].copy()

    # Add validation error messages (the masks align to invalid_df's rows by index label)
    invalid_df['errors'] = ''
    invalid_df.loc[missing_email, 'errors'] += 'missing_email;'
    invalid_df.loc[missing_name, 'errors'] += 'missing_name;'
    invalid_df.loc[invalid_email & ~missing_email, 'errors'] += 'invalid_email_format;'
    invalid_df.loc[invalid_age, 'errors'] += 'invalid_age;'

    return valid_df, invalid_df


# Usage
records = [
    {'name': 'Alice', 'email': 'alice@example.com', 'age': 28},
    {'name': '', 'email': 'invalid-email', 'age': 35},
    {'name': 'Charlie', 'email': None, 'age': 200},
]

valid, invalid = validate_user_data(records)
print(f'Valid records: {len(valid)}')
print(f'Invalid records: {len(invalid)}')
```

With Pandas, you can identify missing fields, inconsistent formats, or invalid values before the data reaches the database layer.
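The range check on `age` in `validate_user_data` assumes the column is already numeric. If a client sends values such as `"thirty"`, the comparison raises a `TypeError` instead of flagging the record. One common remedy is to coerce the column with `pd.to_numeric(..., errors='coerce')`. A value that was present but failed to parse can then be treated as a separate type error. The following sketch uses hypothetical data:

```python
import pandas as pd

# Hypothetical payload where ages arrived as strings, junk, or not at all
raw = pd.DataFrame({'name': ['Alice', 'Bob', 'Cara'], 'age': ['28', 'thirty', None]})

# errors='coerce' turns unparseable values into NaN instead of raising
raw['age_parsed'] = pd.to_numeric(raw['age'], errors='coerce')

# Present-but-unparseable is a type error, distinct from a simply missing value
bad_type = raw['age'].notna() & raw['age_parsed'].isna()
print(raw[bad_type])
```

After coercion, range checks can run safely on `age_parsed`.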

Efficient Aggregations for API Responses

Many backend APIs provide aggregated statistics. Pandas lets you compute these metrics quickly during development.

```python
import pandas as pd

# Load transaction data
transactions = pd.read_parquet('transactions.parquet')

# Daily revenue aggregation (named aggregation produces flat, descriptive column names)
daily_revenue = transactions.groupby(
    transactions['timestamp'].dt.date
).agg(
    total_revenue=('amount', 'sum'),
    avg_transaction=('amount', 'mean'),
    transaction_count=('amount', 'count'),
    unique_users=('user_id', 'nunique'),
).round(2)

# Revenue by category with multiple metrics (dict-based agg yields MultiIndex columns)
category_stats = transactions.groupby('category').agg({
    'amount': ['sum', 'mean', 'std'],
    'transaction_id': 'count',
})

# Pivot table for cross-analysis: weekday names as rows, categories as columns
pivot = pd.pivot_table(
    transactions,
    values='amount',
    index=transactions['timestamp'].dt.day_name(),
    columns='category',
    aggfunc='sum',
    fill_value=0,
)

# Convert to a list of dicts that a web framework can serialize as a JSON response
api_response = daily_revenue.reset_index().to_dict(orient='records')
```

Although production workloads are better served by running aggregations in the database itself or in a dedicated analytics store, Pandas is ideal for prototyping and validating aggregation logic.
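One way to validate aggregation logic is to compute the expected result in Pandas, run the candidate SQL against the same sample, and compare the two with `pd.testing.assert_frame_equal`. In this sketch, an in-memory SQLite database stands in for the production database and the data is hypothetical. Dialect differences between SQLite and your real database may still matter.

```python
import sqlite3

import pandas as pd

# Hypothetical sample of transactions, standing in for a slice of production data
transactions = pd.DataFrame({
    'category': ['books', 'books', 'games', 'games', 'games'],
    'amount': [12.5, 7.5, 60.0, 20.0, 40.0],
})

# Reference result computed with Pandas
expected = transactions.groupby('category', as_index=False).agg(
    total=('amount', 'sum'),
    orders=('amount', 'count'),
)

# Candidate SQL for production, run against an in-memory SQLite copy of the same sample
conn = sqlite3.connect(':memory:')
transactions.to_sql('transactions', conn, index=False)
actual = pd.read_sql_query(
    'SELECT category, SUM(amount) AS total, COUNT(*) AS orders '
    'FROM transactions GROUP BY category ORDER BY category',
    conn,
)
conn.close()

# Raises AssertionError describing the mismatch if the two results differ
pd.testing.assert_frame_equal(expected, actual, check_dtype=False)
print(actual)
```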

ETL Prototyping and Data Transformations

Before creating Airflow jobs or distributed processing pipelines, backend engineers can use Pandas to test logic and transformations.

```python
import pandas as pd
import numpy as np


def transform_user_activity(raw_df: pd.DataFrame) -> pd.DataFrame:
    """Transform raw user activity into analytics-ready format."""
    df = raw_df.copy()

    # Parse timestamps
    df['event_time'] = pd.to_datetime(df['event_time'])
    df['event_date'] = df['event_time'].dt.date
    df['event_hour'] = df['event_time'].dt.hour

    # Normalize numeric values (divide by the maximum so the longest duration maps to 1.0)
    df['normalized_duration'] = df['duration_seconds'] / df['duration_seconds'].max()

    # Create categorical bins; include_lowest keeps 0-second events in 'short' instead of NaN
    df['duration_bucket'] = pd.cut(
        df['duration_seconds'],
        bins=[0, 30, 120, 300, np.inf],
        labels=['short', 'medium', 'long', 'extended'],
        include_lowest=True,
    )

    # Fill missing values with sensible defaults
    df['referrer'] = df['referrer'].fillna('direct')
    df['device_type'] = df['device_type'].fillna('unknown')

    # Remove duplicates
    df = df.drop_duplicates(subset=['user_id', 'event_time', 'event_type'])

    # Add derived features
    df['is_weekend'] = df['event_time'].dt.dayofweek >= 5
    df['is_business_hours'] = df['event_hour'].between(9, 17, inclusive='left')  # 09:00-16:59

    return df


# Test the transformation
raw_data = pd.read_csv('raw_events.csv')
transformed = transform_user_activity(raw_data)
print(f'Transformed {len(transformed)} records')
print(transformed.dtypes)
```

This approach reduces development time and creates a reliable reference implementation before building distributed pipelines.
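A reference implementation is most useful when a small, hand-written fixture pins down its behavior. This sketch uses a hypothetical, trimmed-down transform (`clean_events`, not the full `transform_user_activity`). It checks the output with `pd.testing.assert_frame_equal`, which reports the differing column and values when the output drifts.

```python
import pandas as pd


def clean_events(raw: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical transform: default missing referrers and drop duplicate events."""
    df = raw.copy()
    df['referrer'] = df['referrer'].fillna('direct')
    deduped = df.drop_duplicates(subset=['user_id', 'event_time', 'event_type'])
    return deduped.reset_index(drop=True)


# Fixture covering the edge cases the transform must handle: a duplicate and a missing referrer
raw = pd.DataFrame({
    'user_id': [1, 1, 2],
    'event_time': ['2024-06-01 09:00', '2024-06-01 09:00', '2024-06-01 10:00'],
    'event_type': ['click', 'click', 'view'],
    'referrer': [None, None, 'google'],
})
expected = pd.DataFrame({
    'user_id': [1, 2],
    'event_time': ['2024-06-01 09:00', '2024-06-01 10:00'],
    'event_type': ['click', 'view'],
    'referrer': ['direct', 'google'],
})

pd.testing.assert_frame_equal(clean_events(raw), expected)
```

The same fixture can later be run against the production pipeline's output to confirm that both implementations agree.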

Working with Large Datasets

Backend engineers often encounter large datasets that do not fit into memory. Although Pandas is memory-bound, it supports several patterns that help manage larger workloads.

```python
import pandas as pd

# Process large CSV in chunks
chunk_size = 100_000
results = []

for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):
    # Reduce each chunk to a small partial result before loading the next one
    filtered = chunk[chunk['status'] == 'completed']
    aggregated = filtered.groupby('category')['amount'].sum()
    results.append(aggregated)

# Combine partial sums (the same category can appear in many chunks)
final = pd.concat(results).groupby(level=0).sum()

# Optimize memory with appropriate dtypes
dtype_spec = {
    'user_id': 'int32',      # fails if the column has missing values; use nullable 'Int32' then
    'category': 'category',  # Much smaller than strings for repeated values
    'status': 'category',
    'amount': 'float32',     # about 7 significant digits; prefer float64 or integer cents for money
}
df = pd.read_csv('data.csv', dtype=dtype_spec)

# Check memory usage
print(df.memory_usage(deep=True))

# Convert existing string columns after loading
df['country'] = df['country'].astype('category')
```

Loading data in chunks, using compact dtypes such as category, reducing each batch before loading the next, and storing intermediate results in Parquet let Pandas handle datasets larger than a single in-memory load would allow.
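The `category` dtype saves memory by storing each distinct value once, plus a small integer code per row. A quick way to check the effect on your own data is to compare `memory_usage(deep=True)` before and after conversion. Here it is applied to a synthetic, hypothetical column:

```python
import pandas as pd

# Hypothetical status column: about a million rows drawn from three distinct values
statuses = pd.Series(['completed', 'pending', 'failed'] * 333_334)

# deep=True counts the Python string objects, not just the pointers to them
as_object = statuses.astype(object).memory_usage(deep=True)
as_category = statuses.astype('category').memory_usage(deep=True)

print(f'object:   {as_object / 1e6:.1f} MB')
print(f'category: {as_category / 1e6:.1f} MB')
```

The saving depends on cardinality. For columns where most values are unique, such as email addresses, `category` can use more memory than plain strings.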

Integrating Pandas with Backend Systems

Pandas can be integrated into several backend environments depending on project needs.

Using Pandas in API Development

```python
from fastapi import FastAPI, Query
import pandas as pd
from functools import lru_cache

app = FastAPI()


@lru_cache(maxsize=1)
def load_analytics_data() -> pd.DataFrame:
    """Load the Parquet file once per worker process and reuse it across requests."""
    return pd.read_parquet('analytics.parquet')


# A plain `def` lets FastAPI run this CPU-bound Pandas work in its threadpool;
# an `async def` endpoint would block the event loop while Pandas runs
@app.get('/api/stats/daily')
def get_daily_stats(
    start_date: str = Query(...),
    end_date: str = Query(...),
):
    df = load_analytics_data()

    # Filter by date range (assumes 'date' is datetime64 or ISO-formatted strings)
    mask = (df['date'] >= start_date) & (df['date'] <= end_date)
    filtered = df[mask]

    # Calculate statistics
    stats = filtered.groupby('date').agg({
        'revenue': 'sum',
        'orders': 'count',
        'users': 'nunique',
    }).reset_index()

    return stats.to_dict(orient='records')
```

Database Interaction

import pandas as pd

from sqlalchemy import create_engine

engine = create_engine('postgresql://user:pass@localhost/db')

# Read from database

df = pd.read_sql(

'SELECT * FROM users WHERE created_at > %s',

engine,

params=['2024-01-01'],

parse_dates=['created_at']

)

# Write back to database

processed_df.to_sql(

'user_analytics',

engine,

if_exists='append',

index=False,

method='multi' # Batch inserts for better performance

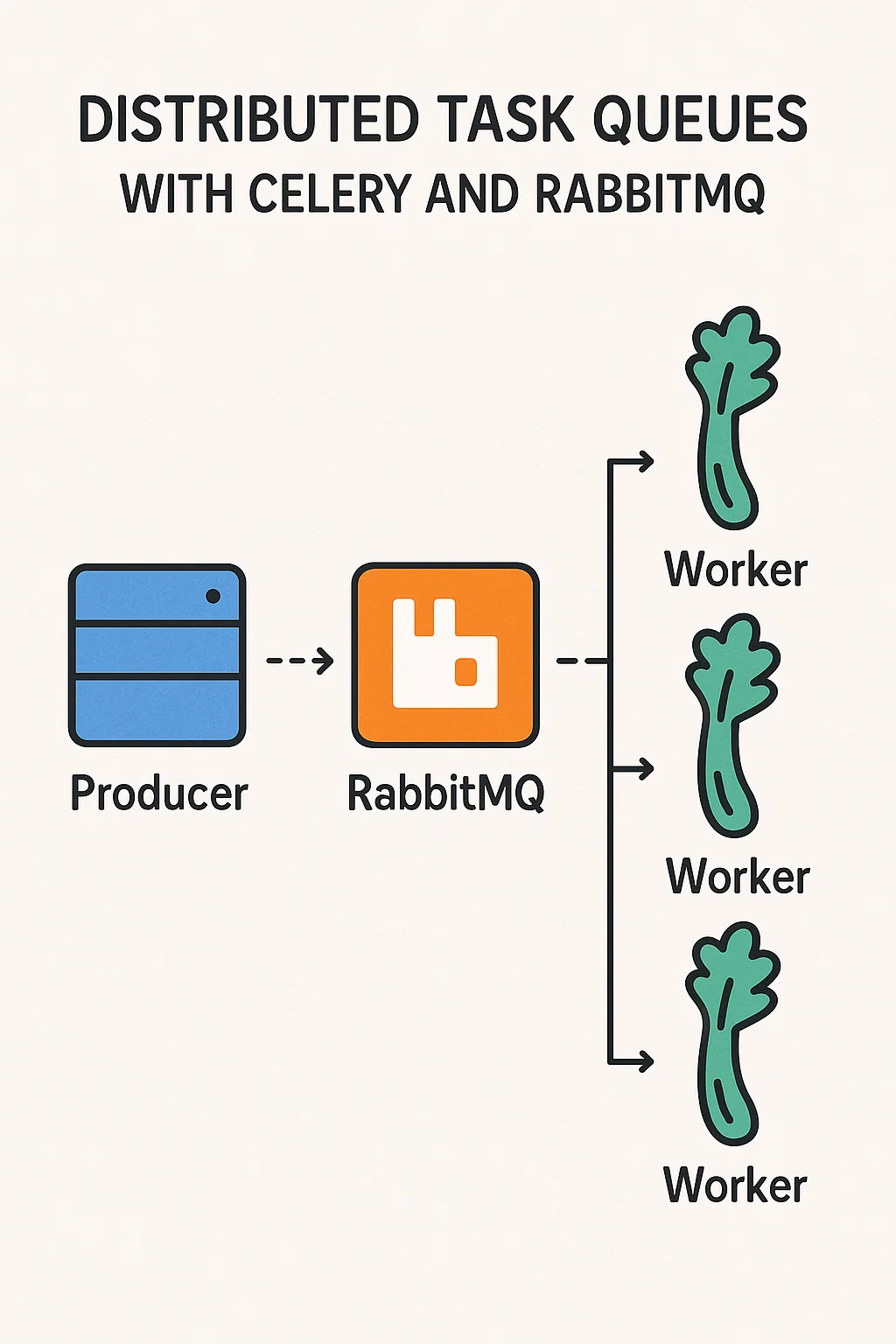

)Combining Pandas with Task Queues

```python
from celery import Celery
import pandas as pd

app = Celery('tasks', broker='redis://localhost:6379')


@app.task
def generate_daily_report(date: str):
    """Generate daily analytics report as background task."""
    # Reading and writing s3:// paths requires the s3fs package
    df = pd.read_parquet(f's3://bucket/events/{date}.parquet')

    # Named aggregation keeps column names as flat strings, which Parquet writers handle cleanly
    report = df.groupby('category').agg(
        revenue_sum=('revenue', 'sum'),
        revenue_mean=('revenue', 'mean'),
        order_count=('orders', 'count'),
    )

    report.to_parquet(f's3://bucket/reports/{date}.parquet')
    return {'status': 'completed', 'rows': len(report)}
```

Real-World Production Scenario

Consider an e-commerce platform with 500,000 daily orders generating logs that need analysis for fraud detection and business reporting. The backend team uses Pandas for multiple workflows.

During incident response, engineers load recent logs into Pandas to identify patterns in failed transactions. The ability to filter, group, and aggregate data interactively helps them pinpoint issues within minutes rather than hours. For weekly reporting, a Celery task uses Pandas to process transaction data, calculate metrics by merchant and category, and generate CSV reports for the finance team.

The team validates all incoming webhook data with Pandas before database insertion. This catches malformed data from third-party integrations early, preventing database constraint violations. When prototyping a new analytics feature, developers use Pandas to test aggregation logic against production data samples before writing optimized SQL queries.

When to Use Pandas

Pandas is ideal for quick insights during development and debugging. Its expressive API suits data validation and cleaning before persistence, as well as log analysis while debugging. It also shortens the development cycle for ETL prototypes and for summaries that you later productionize elsewhere.

When NOT to Use Pandas

Pandas is not suitable for real-time, low-latency API endpoints where every millisecond matters. Datasets that exceed available RAM and cannot be processed in chunks call for out-of-core or distributed tools such as Dask or Spark. Production ETL pipelines should run under dedicated orchestration tools such as Airflow, backed by optimized execution engines. High-concurrency scenarios in which multiple processes need simultaneous access to the same data call for a database.

Common Mistakes

Using Python loops instead of vectorized operations defeats the purpose of Pandas. Always prefer built-in methods over iterating row by row.
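A typical case is a derived column. Both versions below compute the same per-row error rate on hypothetical counters. The second uses a few column-wide operations instead of one Python-level iteration per row, which is where the speed difference on large frames comes from.

```python
import numpy as np
import pandas as pd

# Hypothetical per-endpoint counters
df = pd.DataFrame({'requests': [150, 23, 0, 89, 312], 'errors': [3, 1, 0, 9, 4]})

# Anti-pattern: iterrows() builds a Series per row and runs Python code for each one
rates = []
for _, row in df.iterrows():
    rates.append(row['errors'] / row['requests'] if row['requests'] else 0.0)
df['error_rate_loop'] = rates

# Vectorized: whole-column arithmetic; np.where substitutes 0.0 where there were no requests
df['error_rate'] = np.where(df['requests'] > 0, df['errors'] / df['requests'], 0.0)
```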

Loading an entire large file into memory can exhaust available RAM and crash the process. Use chunked reading, or load a sample first to estimate memory requirements.
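A rough way to size a file before loading it all is to read the first rows with `nrows`, measure bytes per row with `memory_usage(deep=True)`, and extrapolate. In this sketch, an in-memory CSV stands in for a real file, and the total row count is a hypothetical figure. The estimate is only as good as the sample is representative.

```python
import io

import pandas as pd

# Stand-in for a large CSV file; with a real file, pass its path to read_csv instead
csv_data = io.StringIO(
    'user_id,status,amount\n'
    + ''.join(f'{i},completed,{i * 1.5}\n' for i in range(10_000))
)

# Read only the first 1,000 rows and measure their in-memory footprint
sample = pd.read_csv(csv_data, nrows=1_000)
bytes_per_row = sample.memory_usage(deep=True).sum() / len(sample)

# Hypothetical total row count, e.g. taken from `wc -l` or table statistics
total_rows = 50_000_000
estimated_gb = bytes_per_row * total_rows / 1e9
print(f'~{bytes_per_row:.0f} bytes/row, ~{estimated_gb:.1f} GB for {total_rows:,} rows')
```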

Not specifying dtypes leads to inefficient memory usage. Explicitly define column types, especially for categorical data.

Conclusion

Pandas provides backend engineers with a powerful, flexible toolkit for analyzing data, validating records, debugging issues, and prototyping ETL flows. Its intuitive APIs, fast operations, and rich ecosystem make it ideal for development workflows that require structure and insight. Understanding when Pandas is appropriate and when to use other tools keeps your backend systems efficient and maintainable.

If you want to learn more about advanced backend techniques, read "Advanced Pydantic Validation in FastAPI." For task automation and distributed processing, see "Distributed Task Queues with Celery and RabbitMQ." To explore related concepts in real-time API design, check "WebSocket Servers in Python with FastAPI or Starlette." You can also visit the Pandas documentation for comprehensive API references. Using Pandas effectively helps backend engineers build cleaner, more reliable, and more scalable data workflows.