Database connection pooling is one of those topics that feels optional—until your system falls over. Sudden traffic spikes, slow queries, or scaling application replicas often expose the same underlying problem: too many database connections and not enough coordination.

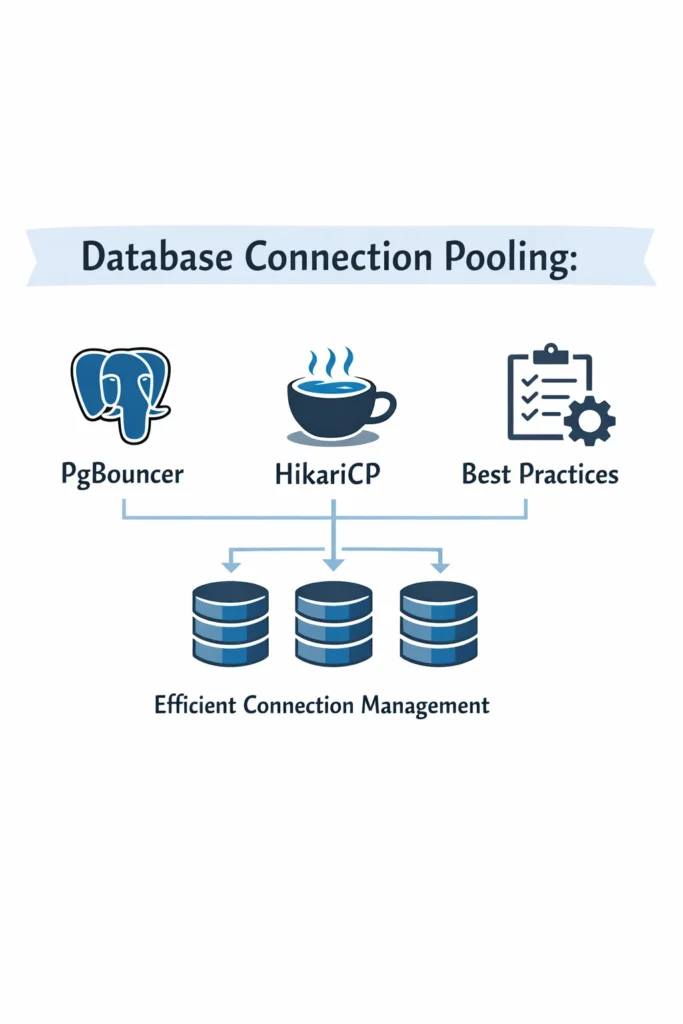

This article explains database connection pooling from a production perspective. You will learn why pooling is necessary, how PgBouncer and HikariCP differ, and which best practices keep systems stable as load increases.

Why Database Connections Are Expensive

Opening a database connection is not cheap. It involves a network handshake (often including TLS), authentication, and, in PostgreSQL, starting a dedicated backend process that allocates its own memory. When applications open and close connections frequently, the database can spend more time managing connections than executing queries.

This problem becomes acute in modern architectures where:

- Applications scale horizontally

- Short-lived requests are common

- ORMs open connections aggressively

If left unchecked, connection storms lead to slowdowns or outright outages—even when queries themselves are fast.

These symptoms often appear alongside performance issues discussed in PostgreSQL performance tuning, where connection pressure amplifies latency problems.

What Connection Pooling Actually Does

A connection pool keeps a bounded set of open database connections (often a fixed number) and reuses them across requests. Instead of opening its own connection, each request borrows one from the pool and returns it when finished.

This approach:

- Reduces connection overhead

- Limits concurrent database connections

- Improves latency predictability

Pooling shifts control from the database to the application or a dedicated proxy, which is essential at scale.
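To make the borrow-and-return cycle concrete, here is a minimal Python sketch of an application-side pool. It is not how HikariCP is implemented; `SimplePool`, `connect`, and `created` are hypothetical names, and the `connect` factory stands in for a real driver's connect call. The point to notice is that connections are opened lazily up to a cap and then reused, so the expensive setup happens a handful of times instead of once per request.

```python
import queue
import threading
from contextlib import contextmanager


class SimplePool:
    """Minimal bounded pool: opens connections lazily up to max_size, then reuses them.

    connect is a hypothetical zero-argument factory standing in for the expensive
    setup step (driver connect, authentication, backend startup).
    """

    def __init__(self, connect, max_size, timeout=5.0):
        self._connect = connect
        self._max_size = max_size
        self._timeout = timeout
        self._idle = queue.LifoQueue()  # most recently returned connection is reused first
        self._lock = threading.Lock()
        self.created = 0                # how many real connections were ever opened

    def _acquire(self):
        try:
            return self._idle.get_nowait()  # reuse an idle connection if one exists
        except queue.Empty:
            pass
        with self._lock:
            may_open = self.created < self._max_size
            if may_open:
                self.created += 1           # reserve a slot under the cap
        if may_open:
            try:
                return self._connect()      # pay the setup cost outside the lock
            except Exception:
                with self._lock:
                    self.created -= 1       # give the slot back if connecting failed
                raise
        # At the cap: wait for another request to return a connection.
        # queue.Empty is raised if none comes back within the timeout.
        return self._idle.get(timeout=self._timeout)

    @contextmanager
    def connection(self):
        conn = self._acquire()
        try:
            yield conn
        finally:
            self._idle.put(conn)            # return to the pool instead of closing


pool = SimplePool(connect=object, max_size=5)
for _ in range(100):
    with pool.connection():
        pass
print(pool.created)  # 1: sequential requests keep reusing the same connection
```

Under concurrent load the pool opens more connections, but never more than `max_size`; extra requests wait instead of adding load to the database.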

Application-Level vs Database-Level Pooling

Connection pooling can exist at different layers.

Application-level pooling lives inside the application process. Each instance manages its own pool.

Database-level pooling uses an external service that sits between the application and the database.

Understanding this distinction is key when comparing HikariCP and PgBouncer.

HikariCP: Fast Application-Level Pooling

HikariCP is a high-performance JDBC connection pool commonly used in Java applications.

It focuses on:

- Minimal overhead

- Fast connection acquisition

- Tight integration with application lifecycle

HikariCP works well when:

- Applications are JVM-based

- Each instance runs a predictable number of threads

- You want fine-grained control per service

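However, application-level pools multiply with replicas. Ten application instances mean ten separate pools, so the worst-case total is the number of replicas times the maximum pool size. Without careful sizing, that total can quietly exceed what the database allows, especially when autoscaling adds replicas.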

This scaling behavior mirrors challenges discussed in microservices scaling patterns, where per-service limits must be coordinated globally.
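The worst-case arithmetic is worth writing down. This Python sketch (the function names and the reserved-connection figure are hypothetical) computes the total across replicas and the largest per-instance pool that still fits under the server limit when autoscaling reaches its maximum replica count.

```python
def total_app_connections(replicas: int, max_pool_size: int) -> int:
    """Worst case: every replica fills its pool at the same time."""
    return replicas * max_pool_size


def max_pool_size_per_instance(max_connections: int, reserved: int, max_replicas: int) -> int:
    """Largest per-replica pool whose worst case still fits under the server limit.

    reserved: connections held back for admins, migrations, and other services.
    max_replicas: the most replicas autoscaling can ever run, not today's count.
    """
    if max_replicas <= 0:
        raise ValueError("max_replicas must be positive")
    per_instance = (max_connections - reserved) // max_replicas
    if per_instance < 1:
        raise ValueError("budget too small for this many replicas; a central pooler may be needed")
    return per_instance


print(total_app_connections(replicas=10, max_pool_size=10))                          # 100
print(max_pool_size_per_instance(max_connections=100, reserved=10, max_replicas=20))  # 4
```

For instance, ten replicas each using HikariCP's default `maximumPoolSize` of 10 already add up to 100 connections, which is PostgreSQL's default `max_connections`, before any other client connects.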

PgBouncer: Database-Level Pooling

PgBouncer is a lightweight connection pooler that sits in front of PostgreSQL.

Instead of each application managing its own pool, PgBouncer centralizes connection management. Applications connect to PgBouncer, and PgBouncer manages a smaller, controlled set of database connections.

PgBouncer supports different pooling modes:

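- Session pooling: a server connection is assigned to a client for as long as the client stays connected

- Transaction pooling: a server connection is assigned only for the duration of a transaction

- Statement pooling: a server connection is released after each statement, and multi-statement transactions are not allowed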

This flexibility allows PgBouncer to serve many clients with far fewer database connections.
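Why can transaction pooling serve so many clients? A simplified way to see it is to compare how long a server connection stays tied to one client in each mode. The Python model below is an illustration, not PgBouncer's logic; `ClientSession` and `server_hold_ms` are hypothetical names, and the model ignores queuing when transactions from different clients overlap.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClientSession:
    length_ms: int                                           # how long the client stays connected
    transaction_ms: List[int] = field(default_factory=list)  # duration of each transaction


def server_hold_ms(session: ClientSession, mode: str) -> int:
    """Milliseconds one server connection is tied up serving this client session."""
    if mode == "session":
        # pinned to the client until it disconnects, idle time included
        return session.length_ms
    if mode == "transaction":
        # borrowed only while a transaction is open
        return sum(session.transaction_ms)
    # statement mode is left out: PgBouncer rejects multi-statement transactions there
    raise ValueError(f"unsupported mode: {mode!r}")


s = ClientSession(length_ms=60_000, transaction_ms=[50, 50, 50])
print(server_hold_ms(s, "session"))      # 60000
print(server_hold_ms(s, "transaction"))  # 150
```

In this idealized model, a mostly idle client occupies a server connection for 150 ms out of 60 s in transaction mode, which is why a few server connections can back many clients. Real multiplexing is lower because transactions from different clients collide.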

PgBouncer is especially useful when:

- Many application instances connect to one database

- Serverless or short-lived processes are involved

- You want strict global connection limits

PgBouncer vs HikariCP: How to Choose

The choice is not always either–or.

Use HikariCP when:

- You run JVM applications

- Connection counts are predictable

- You need tight control inside the app

Use PgBouncer when:

- You need global connection control

- You have many replicas or services

- You want to protect PostgreSQL from spikes

In many production systems, both are used together: HikariCP manages a small local pool, and PgBouncer enforces global limits.

This layered approach resembles patterns discussed in API gateway architectures, where responsibility is split across layers for safety.

Common Pooling Configuration Mistakes

Most pooling issues come from configuration, not tooling.

Common mistakes include:

- Setting pool sizes too high

- Ignoring database connection limits

- Using default settings in production

- Not monitoring pool saturation

A pool that is too large increases contention and memory usage. A pool that is too small causes request queuing and latency spikes.

Finding the right balance requires observing real traffic patterns.
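Little's law (L = λW) turns observed traffic into a first estimate: the average number of connections in use equals the request rate times how long each request holds a connection. A minimal Python sketch follows; the function name and the 1.2 burst margin are hypothetical choices, and both inputs should come from production measurements.

```python
import math


def connections_needed(requests_per_second: float, avg_hold_ms: float,
                       headroom: float = 1.2) -> int:
    # Little's law: average items in a system = arrival rate * time each item spends there.
    # Here the "items" are checked-out connections.
    busy_on_average = requests_per_second * (avg_hold_ms / 1000.0)
    # headroom is a hypothetical burst margin; derive it from observed peaks, not guesses
    return max(1, math.ceil(busy_on_average * headroom))


print(connections_needed(requests_per_second=400, avg_hold_ms=20))  # 10
```

Treat the result as what the observed load requires, not as a target. If it exceeds what the database handles efficiently, shortening hold times (faster queries, less work inside transactions) helps more than a bigger pool.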

Pool Size Is Not Throughput

A common misconception is that more connections mean more throughput.

In reality, databases often perform better with fewer, well-utilized connections. Beyond a certain point, additional connections increase context switching and lock contention.

This principle aligns with lessons from profiling CPU and memory usage, where saturation—not raw capacity—is the real bottleneck.
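One widely quoted starting point, from the PostgreSQL wiki and HikariCP's "About Pool Sizing" page, sizes active connections around the database server's cores rather than around request volume. It is a heuristic to benchmark against, not a rule, and it reads most naturally as a budget for the whole database, shared by every pool that connects to it. The function below simply encodes it; the name is hypothetical.

```python
def starting_pool_size(core_count: int, effective_spindle_count: int = 0) -> int:
    """(core_count * 2) + effective_spindle_count, as a first guess to refine under load.

    core_count: physical cores on the database server (not hyper-threads, not app hosts).
    effective_spindle_count: near 0 when the active data set is fully cached, rising
    toward the real number of disks as the cache hit rate falls.
    """
    return core_count * 2 + effective_spindle_count


print(starting_pool_size(4, 1))  # 9
print(starting_pool_size(8))     # 16
```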

Transaction Pooling and Its Trade-offs

PgBouncer’s transaction pooling mode is powerful but comes with constraints.

In transaction pooling:

- Connections are assigned per transaction, not per session

- Session-level state is not preserved

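This improves efficiency but breaks features that depend on session state, such as session-level SET, LISTEN, session-level advisory locks, and SQL-level PREPARE. Some ORMs and drivers also rely on protocol-level prepared statements, which PgBouncer supports in transaction mode only from version 1.21 onward, and only when max_prepared_statements is enabled.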

Before enabling transaction pooling, ensure your application does not rely on session state.
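The failure mode is easy to reproduce in a toy model. In this hypothetical Python sketch, each transaction goes to whichever backend comes next: real PgBouncer hands out any free server connection, and round-robin here only makes the example deterministic. Only `SET name = value` statements are modeled.

```python
import itertools
from typing import Dict, List


class ServerConnection:
    """Fake PostgreSQL backend. Session settings live here, not on the client."""

    def __init__(self, name: str):
        self.name = name
        self.settings: Dict[str, str] = {}


class ToyTransactionPooler:
    """Each call to run_transaction may land on a different backend."""

    def __init__(self, size: int):
        self._servers = [ServerConnection(f"backend-{i}") for i in range(size)]
        self._next = itertools.cycle(self._servers)  # round-robin for a deterministic demo

    def run_transaction(self, statements: List[str]) -> ServerConnection:
        server = next(self._next)  # assigned for this transaction only
        for stmt in statements:
            if stmt.startswith("SET "):  # only "SET name = value" is modeled
                name, value = stmt[len("SET "):].split(" = ", 1)
                server.settings[name] = value
        return server


pooler = ToyTransactionPooler(size=2)
first = pooler.run_transaction(["SET search_path = tenant_42"])
second = pooler.run_transaction(["SELECT * FROM orders"])
third = pooler.run_transaction(["SELECT 1"])
print(second.name, second.settings.get("search_path"))  # backend-1 None
print(third.name, third.settings.get("search_path"))    # backend-0 tenant_42
```

In the model, the client's own next transaction does not see its setting, while a later transaction, possibly from a different client, inherits it on backend-0.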

Connection Pooling and Migrations

Connection pooling interacts closely with database migrations.

Long-running migrations can exhaust pools, block connections, or cause cascading failures if not coordinated.

Strategies discussed in database migrations in production apply here as well: limit concurrency, schedule heavy operations carefully, and monitor pool health during changes.
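The "limit concurrency" advice maps directly to code when a migration includes a data backfill. This Python sketch assumes the backfill can be split into independent batches; `run_backfill` and `run_batch` are hypothetical names, and the worker cap should sit well below the pool size so live traffic keeps its connections.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_backfill(batch_ids, run_batch, max_concurrent=2):
    """Process migration batches with at most max_concurrent running at once.

    run_batch is a hypothetical callable that runs one short transaction on one
    pooled connection, so the worker cap is also a cap on connections held.
    """
    with ThreadPoolExecutor(max_workers=max_concurrent) as executor:
        # list() collects results in order and re-raises a failed batch's exception
        return list(executor.map(run_batch, batch_ids))


# Demo with a fake batch that tracks how many batches overlap.
stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()


def fake_batch(batch_id):
    with stats_lock:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
    try:
        return batch_id * 1000  # pretend "rows updated"
    finally:
        with stats_lock:
            stats["active"] -= 1


run_backfill(range(10), fake_batch, max_concurrent=2)
print(stats["peak"] <= 2)  # True
```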

Observability: Knowing When Pools Are the Problem

Without visibility, connection pooling issues are hard to diagnose.

Key metrics to monitor include:

- Active vs idle connections

- Wait time for connection acquisition

- Pool exhaustion events

- Database-side connection counts

These metrics often reveal problems long before users notice errors.

Observability practices from monitoring and logging in microservices are especially relevant here.
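The metrics listed above can be gathered around any pool. This toy Python pool records active and idle counts, acquisition wait time, and exhaustion events when a borrower times out. The class and its counters are hypothetical; HikariCP and PgBouncer expose their own metrics, which are what you would scrape in practice.

```python
import queue
import threading
import time
from contextlib import contextmanager


class InstrumentedPool:
    """Fixed-size toy pool that records basic pool-health metrics."""

    def __init__(self, connections, acquire_timeout=1.0):
        self._idle = queue.Queue()
        for conn in connections:
            self._idle.put(conn)
        self._size = len(connections)
        self._timeout = acquire_timeout
        self._lock = threading.Lock()
        self._active = 0
        self._wait_times = []        # seconds each successful acquire waited
        self._exhaustion_events = 0  # acquires that gave up after the timeout

    @contextmanager
    def connection(self):
        start = time.monotonic()
        try:
            conn = self._idle.get(timeout=self._timeout)
        except queue.Empty:
            with self._lock:
                self._exhaustion_events += 1
            raise TimeoutError("connection pool exhausted") from None
        with self._lock:
            self._active += 1
            self._wait_times.append(time.monotonic() - start)
        try:
            yield conn
        finally:
            with self._lock:
                self._active -= 1
            self._idle.put(conn)

    def snapshot(self):
        with self._lock:
            return {
                "active": self._active,
                "idle": self._size - self._active,
                "max_wait_s": max(self._wait_times, default=0.0),
                "exhaustion_events": self._exhaustion_events,
            }


pool = InstrumentedPool(["conn-a"], acquire_timeout=0.05)
with pool.connection():
    try:
        with pool.connection():  # the only connection is busy, so this times out
            pass
    except TimeoutError:
        pass
snap = pool.snapshot()
print(snap["active"], snap["idle"], snap["exhaustion_events"])  # 0 1 1
```

A rising `max_wait_s` or any nonzero `exhaustion_events` is usually the earliest sign that the pool, rather than the queries, is the bottleneck.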

A Realistic Connection Pooling Scenario

Consider a service scaled to handle peak traffic. During a marketing campaign, replicas double. Each instance uses a generous pool size.

Suddenly, PostgreSQL reaches its connection limit. New requests hang or fail, even though CPU usage is low.

Introducing PgBouncer with conservative limits stabilizes the system. Connection counts flatten, latency normalizes, and scaling becomes predictable again.

The database did not change—only connection management did.

When Connection Pooling Matters Most

Connection pooling is critical when:

- Traffic is bursty

- Applications scale dynamically

- Databases serve multiple services

- Stability matters more than raw throughput

When Pooling Still Needs Care

Pooling does not solve:

- Slow queries

- Lock contention

- Poor indexing

It masks symptoms but does not replace query optimization. Pooling and performance tuning must work together.

Conclusion

Database connection pooling is foundational infrastructure, not an optimization detail. PgBouncer and HikariCP solve different problems at different layers, and understanding those layers is key to building stable systems.

A practical next step is to inventory your current connection usage. Count pools, sum maximum connections, and compare that number to what your database can safely handle. Most production issues start there—and many end once pooling is configured intentionally.