AI tools are becoming integral to modern software development. From code generation to automated reviews, they save time and boost productivity. However, this power brings responsibilities that developers and organizations cannot ignore.

The ethical implications of using AI in coding extend beyond technical considerations. Privacy, bias, intellectual property, and accountability all require careful attention. Understanding these issues helps teams use AI responsibly while maximizing its benefits.

In this article, we’ll explore the key ethical considerations when using AI tools in coding, examine real-world scenarios where these issues arise, and provide actionable best practices for responsible AI adoption.

Why Ethics Matter in AI-Assisted Coding

AI coding tools operate differently from traditional development aids. They learn from vast datasets of existing code, generate novel outputs, and make suggestions that developers may accept without full understanding. This creates unique ethical challenges.

Scale of Impact

When a developer writes problematic code manually, the impact is limited to their own work. When AI generates similar code for thousands of developers simultaneously, the impact scales dramatically. A single bias or vulnerability in AI suggestions can propagate across countless projects.

Opacity of Decisions

AI suggestions arrive without explanation of their origin or reasoning. Developers often cannot determine whether generated code reflects best practices, outdated patterns, or potentially problematic approaches without careful review.

Speed of Adoption

The rapid adoption of AI coding tools has outpaced the development of ethical frameworks and organizational policies. Many teams use these tools without clear guidelines, creating risk exposure they may not recognize.

Data Privacy Concerns

AI tools often process source code that contains sensitive information. Understanding how your code is handled is essential for maintaining security and compliance.

Proprietary Code Exposure

When you use cloud-based AI coding assistants, your code may be sent to external servers for processing. This raises immediate concerns:

- Trade secrets: Algorithms, business logic, and competitive advantages embedded in code could be exposed

- Secrets and customer data: Hardcoded credentials, API keys, or real customer records used as sample data or in code comments may be transmitted

- Security vulnerabilities: Security-related code can reveal potential attack vectors

Training Data Policies

Some AI providers use submitted code to improve their models. This means your proprietary code might influence future suggestions to other users. Even if not directly exposed, patterns and approaches unique to your organization could leak indirectly.

```python
# Before sending code to an AI assistant, check what you're exposing
import os

import psycopg2


# Problematic - contains sensitive information
def connect_database():
    return psycopg2.connect(
        host="prod-db.internal.company.com",
        password="actual_production_password",  # Never in code anyway!
        user="admin",
    )


# Better - read settings from environment variables, and sanitize code
# before asking for AI assistance
def connect_database():
    # os.environ[...] raises KeyError when a variable is missing,
    # instead of silently passing None to the driver
    return psycopg2.connect(
        host=os.environ["DB_HOST"],
        password=os.environ["DB_PASSWORD"],
        user=os.environ["DB_USER"],
    )
```
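You can partly automate the check for what you're exposing. The following is a minimal sketch that assumes a plain regex pass is acceptable as a first line of defense. The pattern list (`SECRET_PATTERNS`) and the helper names are illustrative assumptions and are not taken from any particular tool. Dedicated secret scanners cover far more cases.

```python
import re

# Illustrative patterns only (an assumption, not an exhaustive rule set)
SECRET_PATTERNS = {
    "password assignment": re.compile(
        r"""(?i)\b(password|passwd|secret|api_key|token)\s*=\s*["'][^"']+["']"""
    ),
    "internal hostname": re.compile(r"\b[\w.-]*\.internal\.[\w.-]+"),
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) pairs for lines that look sensitive."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def redact(source: str) -> str:
    """Replace the quoted values of password-like assignments with a placeholder."""
    return SECRET_PATTERNS["password assignment"].sub(
        lambda m: f'{m.group(1)}="<REDACTED>"', source
    )
```

Run against the problematic `connect_database` above, `find_secrets` would flag both the internal hostname and the hardcoded password. `redact` then replaces the password value before the snippet is pasted into an assistant. Regexes produce both false positives and misses, so treat a clean result as a hint rather than a guarantee.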

Compliance Requirements

Many organizations operate under regulatory frameworks (GDPR, HIPAA, PCI-DSS) that restrict how data can be processed and where it can be sent. Using external AI services may violate these requirements, even unintentionally.

Consider whether your AI tool provider offers:

- Data processing agreements (DPAs)

- Regional data residency options

- Enterprise agreements with enhanced privacy protections

- Self-hosted deployment options

Copyright and Licensing Issues

AI-generated code raises complex intellectual property questions that the legal system is still working to resolve. Developers need awareness of these issues even without definitive answers.

Training Data Origins

AI models are trained on vast codebases that include open-source projects under various licenses. When AI generates code similar to training examples, questions arise:

- Does generated code inherit the license of similar training examples?

- Can copyleft licenses (GPL) propagate through AI generation?

- What constitutes “substantial similarity” in AI-generated code?

License Contamination Risk

Consider this scenario: your AI assistant generates a function that closely matches GPL-licensed code from its training data. Using it in proprietary software could create legal liability. Because you typically cannot inspect the model's training data, this risk is difficult to assess.

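```javascript
// AI might generate code very similar to this GPL-licensed function
// from a popular open-source project
function partition(arr, low, high) {
  // Lomuto partition: the last element is the pivot
  const pivot = arr[high];
  let i = low - 1;
  for (let j = low; j < high; j++) {
    if (arr[j] <= pivot) {
      i++;
      [arr[i], arr[j]] = [arr[j], arr[i]];
    }
  }
  [arr[i + 1], arr[high]] = [arr[high], arr[i + 1]];
  return i + 1;
}

function quickSort(arr, low = 0, high = arr.length - 1) {
  if (low < high) {
    const pivotIndex = partition(arr, low, high);
    quickSort(arr, low, pivotIndex - 1);
    quickSort(arr, pivotIndex + 1, high);
  }
  return arr;
}

// Question: Is this a common algorithm implementation
// or a derivative of specific GPL code?
```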

Attribution Challenges

Many open-source licenses require attribution. When AI generates code influenced by multiple sources, proper attribution may be impossible: even with good intentions, you cannot credit original authors when you don't know who they are.

Practical Mitigations

- Use AI tools that filter training data for license compliance or block suggestions that match public code

- Run generated code through license scanning tools

- Document AI assistance in your development process

- Consult legal counsel for high-stakes projects
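License scanners generally compare code against large indexes of known open-source files. The sketch below shows only the core idea, applied to a small local corpus, under these assumptions:

- Code is normalized into tokens, with comments and whitespace dropped.
- Python's `difflib.SequenceMatcher` scores the overlap.
- The 0.8 threshold is an arbitrary example value.

A high score should prompt human or legal review. It is not a legal finding.

```python
import difflib
import re


def normalize(code: str) -> list[str]:
    """Tokenize code so that formatting and comments don't hide copying."""
    # Naive: strips // and # line comments, even inside string literals
    code = re.sub(r"//.*|#.*", "", code)
    return re.findall(r"\w+|[^\w\s]", code)


def similarity(generated: str, reference: str) -> float:
    """Return a 0..1 ratio of matching tokens between two snippets."""
    matcher = difflib.SequenceMatcher(None, normalize(generated), normalize(reference))
    return matcher.ratio()


def flag_matches(generated: str, corpus: dict[str, str], threshold: float = 0.8) -> list[str]:
    """Return the names of reference snippets that the generated code closely matches."""
    return [name for name, ref in corpus.items() if similarity(generated, ref) >= threshold]
```

Renaming identifiers lowers this token-based score. That is one reason production scanners rely on more robust fingerprinting.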

Bias in AI Models

AI coding tools inherit biases from their training data. These biases can perpetuate poor practices, reinforce outdated patterns, and even introduce discriminatory elements into software.

Code Quality Bias

Training data includes code of varying quality. AI may suggest patterns that, while common, represent suboptimal practices. Examples include:

- Security anti-patterns that appear frequently in older code

- Deprecated API usage that hasn't been updated in training data

- Inefficient algorithms popular before better alternatives emerged

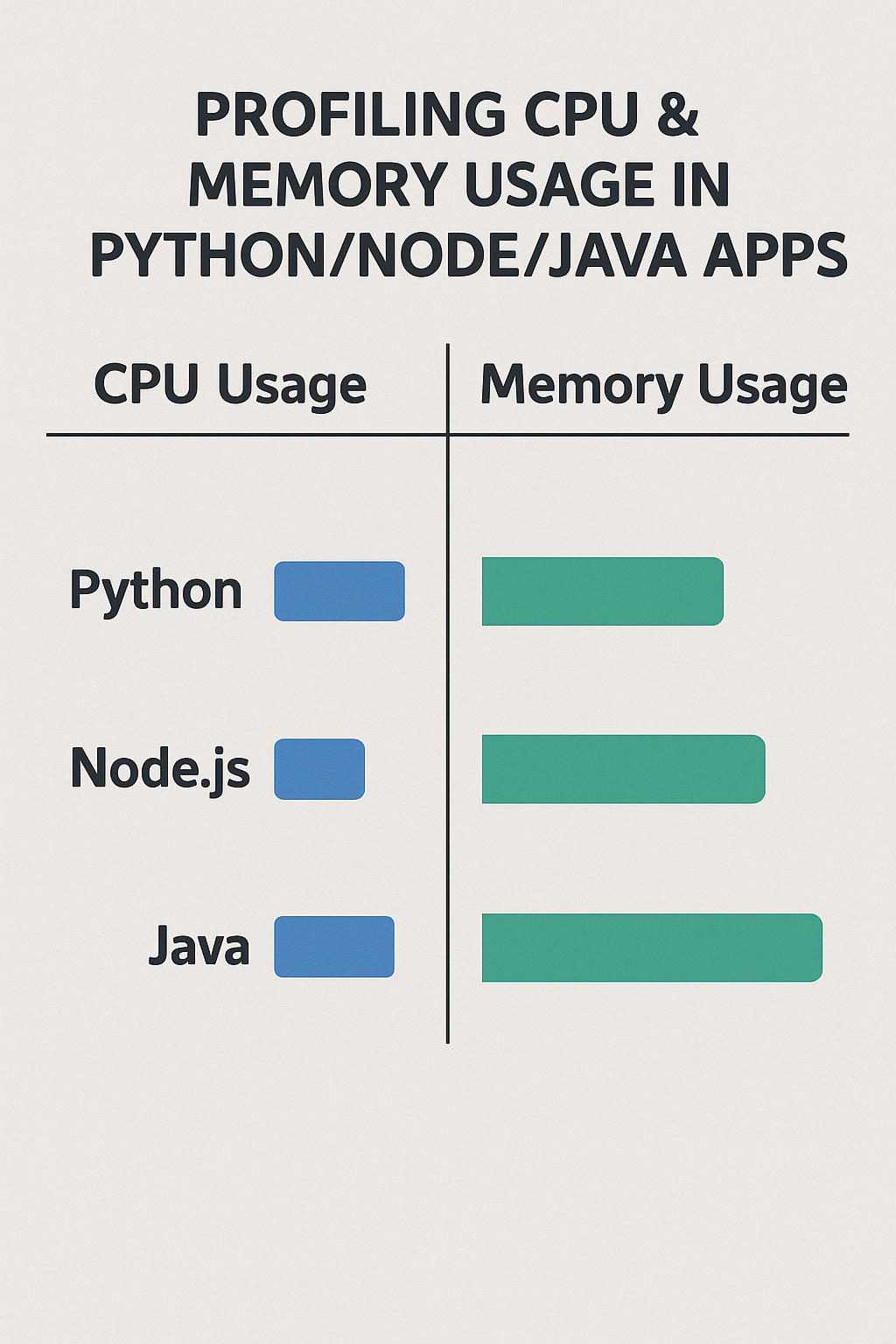
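One concrete security anti-pattern that is common in older code is building SQL queries with string formatting, and an assistant may suggest it. The sketch below contrasts that approach with a parameterized query using Python's built-in `sqlite3` module. The `users` table and the function names are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple[int, str]]:
    # Anti-pattern: user input is spliced into the SQL text (injectable)
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{username}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple[int, str]]:
    # Parameterized query: the driver passes username as data, never as SQL
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()
```

Given a payload such as `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version returns none.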

Representation Bias

Training data over-represents certain programming languages, frameworks, and coding styles. AI suggestions may be less reliable for:

- Less popular programming languages

- Newer frameworks with limited training examples

- Domain-specific coding patterns with niche applications

Societal Bias

More concerning still, AI can perpetuate societal biases present in training data. Variable names, comments, and example data might reflect stereotypes or assumptions that shouldn't appear in production code.

```python
# AI might generate examples with biased assumptions

# Problematic - assumes gender binary, reinforces stereotypes
def calculate_salary(employee):
    base = 50000
    if employee.gender == "male":
        return base * 1.15  # Historical bias in training data
    return base


# Better - no discriminatory factors
def calculate_salary(employee):
    # calculate_performance_bonus is an application-specific helper (not shown)
    return employee.base_salary + calculate_performance_bonus(employee)
```

Detecting Bias

Regularly audit AI-generated code for:

- Gendered or culturally specific variable names

- Assumptions about users embedded in logic

- Default values that may not apply universally

- Test data that reflects stereotypes
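Part of this audit can be automated for Python code. The following is a minimal sketch. It assumes that any branch on an attribute named like `gender` or `race` deserves a human look, and the `SENSITIVE_ATTRS` list is an illustrative assumption. A hit is not proof of bias, since some domains legitimately use these fields, and a clean run does not rule bias out.

```python
import ast

# Attribute names treated as sensitive (an illustrative assumption; extend per domain)
SENSITIVE_ATTRS = {"gender", "sex", "race", "ethnicity", "religion", "age", "nationality"}


def find_sensitive_branches(source: str) -> list[tuple[int, str]]:
    """Flag if-statements and conditional expressions whose test reads a sensitive attribute."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.If, ast.IfExp)):
            for sub in ast.walk(node.test):
                # Matches attribute access such as employee.gender or user.age
                if isinstance(sub, ast.Attribute) and sub.attr in SENSITIVE_ATTRS:
                    findings.append((node.lineno, sub.attr))
    return findings
```

Run against the problematic `calculate_salary` above, it reports the line containing `if employee.gender == "male":`.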

Over-Reliance on AI

AI assistance can undermine the very skills that make developers effective. Recognizing these risks helps maintain healthy human-AI collaboration.

Skill Atrophy

Developers who accept AI suggestions without understanding them may lose the ability to solve problems independently. When AI fails (and it will), these developers lack the foundational skills to proceed.

Reduced Critical Thinking

The convenience of AI suggestions can reduce the critical evaluation that catches bugs and security issues. "The AI wrote it" becomes an implicit endorsement that bypasses normal review rigor.

Understanding Gaps

Code you don't write is code you may not understand. AI-generated code that "works" but isn't understood creates maintenance challenges and debugging difficulties later.

Healthy Practices

- Understand before accepting: Review AI suggestions as carefully as human-written code

- Practice without AI: Regularly solve problems independently to maintain skills

- Question suggestions: Ask why AI recommends specific approaches

- Verify correctness: Test AI-generated code thoroughly rather than trusting it implicitly
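Differential testing is one way to test AI-generated code thoroughly: run the suggestion and a trusted reference on many random inputs and compare the results. In this sketch, `ai_quick_sort` is a hypothetical stand-in for an AI suggestion, and Python's built-in `sorted` serves as the reference.

```python
import random


def ai_quick_sort(items: list[int]) -> list[int]:
    """Hypothetical AI-suggested function under review."""
    if len(items) <= 1:
        return list(items)
    pivot, *rest = items
    smaller = [x for x in rest if x < pivot]
    larger_or_equal = [x for x in rest if x >= pivot]
    return ai_quick_sort(smaller) + [pivot] + ai_quick_sort(larger_or_equal)


def differential_test(candidate, reference, trials: int = 1000, seed: int = 0) -> None:
    """Compare candidate with a trusted reference; raise AssertionError on the first mismatch."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    # Hand-picked edge cases first: empty, single item, duplicates, reverse order
    cases = [[], [1], [2, 2, 2], list(range(10, 0, -1))]
    cases += [
        [rng.randint(-50, 50) for _ in range(rng.randint(0, 30))]
        for _ in range(trials)
    ]
    for case in cases:
        expected = reference(case)
        actual = candidate(list(case))  # pass a copy so a mutating candidate can't alter the case
        if actual != expected:
            raise AssertionError(f"mismatch on {case}: {actual} != {expected}")
```

`differential_test(ai_quick_sort, sorted)` passes silently. A candidate that drops duplicates, such as `lambda xs: sorted(set(xs))`, fails on the `[2, 2, 2]` edge case.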

Accountability Challenges

When AI-generated code causes problems, determining responsibility becomes complicated. Clear accountability structures are essential for responsible AI use.

The Accountability Gap

If AI-generated code introduces a security vulnerability that leads to a data breach, who bears responsibility?

- The developer who accepted the suggestion?

- The organization that approved AI tool usage?

- The AI provider whose model generated the code?

- The reviewers who approved the pull request?

AI tool terms of service commonly disclaim liability for generated output, placing responsibility on users.

Establishing Clear Responsibility

Organizations should establish policies that clarify:

- Developers remain responsible for all code they commit, regardless of origin

- AI-generated code requires the same review standards as human-written code

- Documentation of AI usage supports incident investigation

- Security and quality gates apply equally to AI-generated code

Documentation Practices

Track AI usage to support accountability:

```bash
# Git commit message indicating AI assistance
git commit -m "feat: Add user validation logic

AI-assisted: GitHub Copilot used for initial implementation
Reviewed and modified by: [Developer Name]
Changes from AI suggestion: Added rate limiting, fixed SQL injection risk"
```
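Documentation stays more consistent when it is checked automatically. The following is a minimal sketch of the logic for a Python `commit-msg` hook, assuming the message format shown above. The rule that AI-assisted commits must name a reviewer is an example policy, not a Git convention. Git runs a `commit-msg` hook with the path to the commit message file as its only argument and aborts the commit if the hook exits with a non-zero status.

```python
import re
import sys

# Keys follow the commit message format shown above; requiring a reviewer
# line on AI-assisted commits is an example policy, not a Git convention.
AI_KEY = "AI-assisted"
REVIEW_KEY = "Reviewed and modified by"
KEY_VALUE = re.compile(r"^([A-Za-z][A-Za-z -]*):\s*(.+)$")


def parse_trailers(message: str) -> dict[str, str]:
    """Collect 'Key: value' lines from the body (every line after the subject)."""
    trailers = {}
    for line in message.splitlines()[1:]:
        match = KEY_VALUE.match(line)
        if match:
            trailers[match.group(1)] = match.group(2).strip()
    return trailers


def check_message(message: str) -> list[str]:
    """Return policy violations for a commit message; an empty list means it passes."""
    trailers = parse_trailers(message)
    if AI_KEY in trailers and REVIEW_KEY not in trailers:
        return [f"commits marked '{AI_KEY}' must include a '{REVIEW_KEY}' line"]
    return []


def main(argv: list[str]) -> int:
    """Hook entry point: git passes the path of the commit message file as argv[1]."""
    with open(argv[1], encoding="utf-8") as f:
        violations = check_message(f.read())
    for violation in violations:
        print(f"commit-msg: {violation}", file=sys.stderr)
    return 1 if violations else 0
```

To install it:

1. Save the file as `.git/hooks/commit-msg`.
2. Add a `#!/usr/bin/env python3` line at the top and `sys.exit(main(sys.argv))` at the bottom.
3. Make the file executable.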

Best Practices for Ethical AI Use

Responsible AI adoption requires intentional practices at both individual and organizational levels.

Individual Developer Practices

- Review AI outputs carefully: Never accept code suggestions blindly. Understand what the code does before committing.

- Verify security implications: Check AI-generated code for vulnerabilities, especially in authentication, data handling, and input validation.

- Test thoroughly: AI-generated code needs the same (or more) testing as human-written code.

- Maintain expertise: Continue learning and practicing without AI to maintain foundational skills.

Organizational Practices

- Establish clear policies: Define guidelines for AI tool usage, including approved tools, use cases, and restrictions.

- Protect sensitive data: Identify which repositories and code types cannot be processed by external AI services.

- Evaluate providers: Assess AI tools for privacy policies, data handling, and security practices before adoption.

- Train developers: Ensure teams understand ethical considerations and organizational policies.
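An exclusion list is one way to make "protect sensitive data" enforceable. The sketch below assumes a hypothetical team policy expressed as glob patterns (`AI_DENYLIST`). An editor integration or a pre-submission script could call `allowed_for_external_ai` before any file content leaves the machine. Note that `fnmatch` patterns let `*` match `/`, so `payments/*` also covers nested directories.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: code matching these patterns must not be sent to external AI services
AI_DENYLIST = (
    "payments/*",    # business-critical logic
    "*/secrets/*",   # credential handling
    "*.pem",         # key material
    "config/prod*",  # production configuration
)


def allowed_for_external_ai(path: str, denylist: tuple[str, ...] = AI_DENYLIST) -> bool:
    """Return False if the repository-relative path matches any denylisted pattern."""
    return not any(fnmatchcase(path, pattern) for pattern in denylist)


def split_by_policy(paths: list[str]) -> tuple[list[str], list[str]]:
    """Partition paths into (allowed, blocked) lists for reporting."""
    allowed = [p for p in paths if allowed_for_external_ai(p)]
    blocked = [p for p in paths if not allowed_for_external_ai(p)]
    return allowed, blocked
```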

Code Review Adaptation

Adapt review processes for AI-assisted development:

- During review, ask whether AI assistance was used

- Pay extra attention to security-sensitive AI-generated code

- Verify that developers understand code they're submitting

- Check for license-problematic patterns in generated code

Balancing Productivity with Responsibility

Ethics and productivity aren't mutually exclusive. The goal is to use AI effectively while maintaining responsibility.

The Hybrid Approach

The most sustainable model treats AI as an assistant rather than a replacement. AI handles routine tasks while humans provide judgment, creativity, and ethical oversight.

Appropriate Use Cases

Good fits for AI assistance:

- Boilerplate code generation

- Documentation drafting

- Test scaffolding

- Syntax lookup and examples

Requires extra caution:

- Security-critical code

- Financial calculations

- Privacy-sensitive data handling

- Compliance-related functionality
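Financial calculations show why the extra caution matters. A suggestion that sums currency amounts as binary floats can look correct in review yet drift by fractions of a cent. The sketch below contrasts that approach with Python's `decimal` module. The function names are illustrative. `ROUND_HALF_UP` is an assumption, since the correct rounding rule depends on your accounting or regulatory requirements.

```python
from decimal import ROUND_HALF_UP, Decimal


def total_float(prices: list[float]) -> float:
    """The kind of code an assistant might suggest: binary floats for money."""
    return sum(prices)


def total_decimal(prices: list[str]) -> Decimal:
    """Exact decimal arithmetic; amounts are passed as strings to avoid float conversion."""
    total = sum((Decimal(p) for p in prices), Decimal("0"))
    # Round to cents once, at the end; the rounding rule here is an assumption
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

`total_float([0.1, 0.2])` evaluates to `0.30000000000000004`, while `total_decimal(["0.1", "0.2"])` returns `Decimal('0.30')`.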

Conclusion

The rise of AI in coding creates both opportunities and responsibilities. By understanding the ethical considerations when using AI tools in coding, developers can build better software while respecting privacy, fairness, and intellectual property.

Success requires balance: leveraging AI for productivity while maintaining human oversight for quality and ethics. Establish clear policies, review AI outputs carefully, and keep developing the skills that make AI assistance valuable rather than risky.

For more on practical AI use in development, see our guide on Building Custom GPT Models for Your Team. To explore how AI is reshaping development workflows, read about The Future of AI in Software Development.
