Introduction

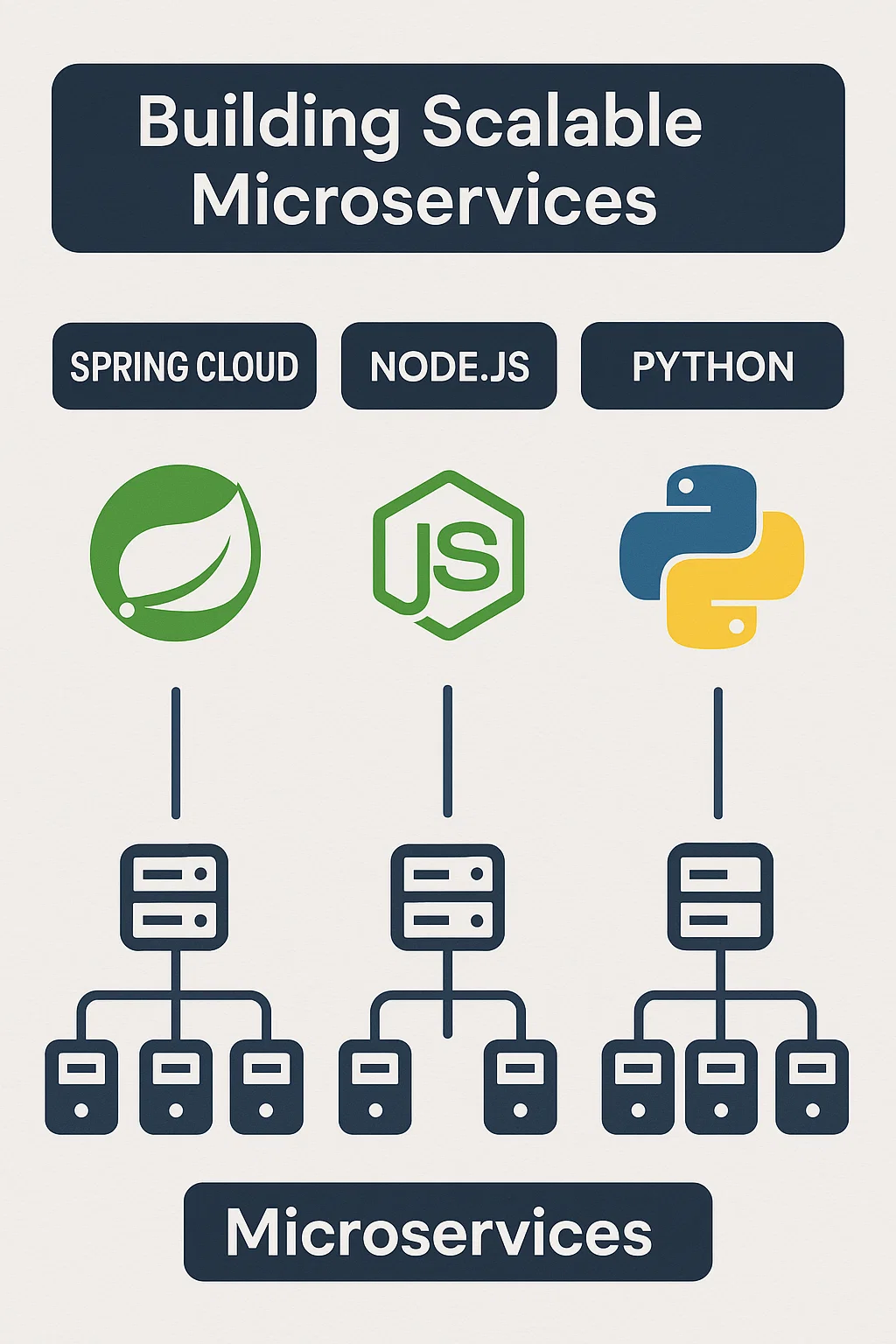

Modern applications demand scalability, resilience, and real-time responsiveness. Traditional synchronous architectures struggle under these requirements: when one service is slow or down, the entire request chain suffers. Event-driven architecture (EDA) has emerged as a key approach to meeting these needs, and Apache Kafka has become the de facto platform for building event-driven microservices at scale. Companies like LinkedIn, Netflix, and Uber process billions of events daily through Kafka, powering everything from real-time analytics to user notifications to distributed transactions. This guide explores how to design microservices with Kafka, covering core concepts, practical implementation patterns, common pitfalls, and best practices for building scalable distributed systems.

Why Event-Driven Microservices?

Event-driven microservices rely on events (immutable records of state changes) to communicate instead of synchronous API calls. When something happens in one service, it publishes an event; interested services consume that event and react accordingly. This fundamental shift in communication patterns unlocks several architectural benefits.

// Synchronous approach - tight coupling

async function processOrder(orderId: string) {

const order = await orderService.getOrder(orderId);

await inventoryService.reserve(order.items); // Blocks if slow

await paymentService.charge(order.total); // Blocks if slow

await shippingService.schedule(order); // Blocks if slow

await notificationService.sendConfirmation(order); // Blocks if slow

// If any service fails, entire operation fails

}

// Event-driven approach - loose coupling

async function processOrder(orderId: string) {

const order = await orderService.getOrder(orderId);

await kafka.produce('order-created', {

orderId: order.id,

items: order.items,

customerId: order.customerId,

total: order.total,

timestamp: Date.now()

});

// Other services react independently to the event

}

The event-driven approach decouples services so that each one can scale independently based on its workload. It improves resilience by avoiding direct dependencies: if the notification service is temporarily down, orders still process, and the notification service catches up on the missed events once it recovers. And it enables real-time data flows for analytics, monitoring, and notifications that are hard to achieve with synchronous request chains.
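
To make the decoupling concrete, here is a minimal in-memory sketch. It does not use Kafka at all: `InMemoryBus`, `subscribe`, and `publish` are hypothetical names invented for this illustration, and handlers are synchronous for brevity. A real broker delivers asynchronously and durably, which this does not model. It only shows that a failing subscriber does not stop the publisher or the other subscribers.

```typescript
// Illustrative only: an in-memory stand-in for a broker, not Kafka.
type Handler = (payload: unknown) => void;

class InMemoryBus {
  private handlers: Record<string, Handler[]> = {};

  subscribe(topic: string, handler: Handler): void {
    const list = this.handlers[topic] ?? [];
    list.push(handler);
    this.handlers[topic] = list;
  }

  // Deliver to every subscriber. One subscriber failing does not affect
  // the rest. Returns the number of subscribers that threw.
  publish(topic: string, payload: unknown): number {
    let failures = 0;
    for (const handler of this.handlers[topic] ?? []) {
      try {
        handler(payload);
      } catch {
        failures += 1;
      }
    }
    return failures;
  }
}

const bus = new InMemoryBus();
const reserved: string[] = [];
const shipped: string[] = [];

// Inventory reacts to the event.
bus.subscribe('order-created', (e) => reserved.push((e as { orderId: string }).orderId));
// Notifications are down, simulated by throwing.
bus.subscribe('order-created', () => { throw new Error('notification service is down'); });
// Shipping still reacts, unaffected by the failure above.
bus.subscribe('order-created', (e) => shipped.push((e as { orderId: string }).orderId));

const failedSubscribers = bus.publish('order-created', { orderId: 'order-123' });
```

After the publish call, `reserved` and `shipped` each contain `order-123` and `failedSubscribers` is 1. With Kafka, the failed service would additionally be able to read the event later from the log, which this sketch leaves out.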

Understanding Kafka Core Concepts

Before designing event-driven systems, you need to understand Kafka’s fundamental components:

Topics and Partitions

Topics are named channels for events. Each topic is divided into partitions, which provide parallelism; ordering is guaranteed only within a single partition.

// Topic: order-events with 6 partitions

// Events are distributed across partitions by a hash of the key (the layout below is illustrative)

// Partition 0: [order-001, order-007, order-013...]

// Partition 1: [order-002, order-008, order-014...]

// Partition 2: [order-003, order-009, order-015...]

// ...

// Key-based partitioning ensures ordering per key

await producer.send({

topic: 'order-events',

messages: [{

key: order.id, // Same order ID always goes to same partition

value: JSON.stringify(event),

headers: {

'event-type': 'OrderCreated',

'correlation-id': correlationId

}

}]

});
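
The key-to-partition mapping can be sketched as "hash the key, then take it modulo the partition count". The functions `hashKey` and `partitionFor` below are hypothetical helpers, and the hash is a simple polynomial stand-in chosen for readability. Kafka's default partitioner uses murmur2, so the partition numbers here will not match a real cluster.

```typescript
// Illustrative stand-in hash. Kafka's default partitioner uses murmur2;
// this polynomial hash only demonstrates the "hash(key) mod N" idea.
function hashKey(key: string): number {
  let hash = 0;
  for (let i = 0; i < key.length; i++) {
    // ">>> 0" keeps the running value as an unsigned 32-bit integer.
    hash = (hash * 31 + key.charCodeAt(i)) >>> 0;
  }
  return hash;
}

function partitionFor(key: string, numPartitions: number): number {
  if (numPartitions < 1) {
    throw new Error('numPartitions must be at least 1');
  }
  return hashKey(key) % numPartitions;
}

// The same key maps to the same partition while the partition count is unchanged.
const p1 = partitionFor('order-123', 6);
const p2 = partitionFor('order-123', 6);

// With a different partition count the same key may land elsewhere.
const pAfterResize = partitionFor('order-123', 8);
```

Here `p1` and `p2` are always equal, which is what gives per-key ordering. The last line hints at a practical consequence: adding partitions to an existing topic can change where a key lands, so per-key ordering across the resize is not preserved.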

Consumer Groups

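Consumer groups enable parallel processing: each partition is assigned to exactly one consumer in a group, so each event is delivered to one consumer instance per group. Delivery is at-least-once by default, so a retry or rebalance can redeliver an event.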

// Multiple instances of inventory-service form a consumer group

// Each partition is assigned to exactly one consumer in the group

const consumer = kafka.consumer({ groupId: 'inventory-service' });

await consumer.connect();

await consumer.subscribe({ topic: 'order-events', fromBeginning: false });

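await consumer.run({

autoCommit: false, // Commit manually below instead of relying on auto-commit

eachMessage: async ({ topic, partition, message }) => {

if (message.value === null) return; // Skip messages without a value (tombstones)

const event = JSON.parse(message.value.toString());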

// Only this consumer instance handles this partition's events

await processOrderEvent(event);

// Commit offset after successful processing

await consumer.commitOffsets([{

topic,

partition,

offset: (parseInt(message.offset) + 1).toString()

}]);

}

});
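
How partitions end up spread over the members of a group can be sketched with a simplified round-robin assignment. `assignPartitions` is a hypothetical function written for this illustration. Kafka's actual assignors (range, round-robin, sticky, cooperative) are more involved and run as part of the group rebalance protocol.

```typescript
// Simplified round-robin assignment, for illustration only.
// Each partition gets exactly one owner within the group.
function assignPartitions(
  partitionCount: number,
  consumerIds: string[],
): Record<string, number[]> {
  const assignment: Record<string, number[]> = {};
  for (const id of consumerIds) {
    assignment[id] = [];
  }
  if (consumerIds.length === 0) {
    return assignment;
  }
  for (let p = 0; p < partitionCount; p++) {
    const owner = consumerIds[p % consumerIds.length];
    assignment[owner].push(p);
  }
  return assignment;
}

// Three instances share six partitions evenly:
// { 'inventory-1': [0, 3], 'inventory-2': [1, 4], 'inventory-3': [2, 5] }
const threeConsumers = assignPartitions(6, ['inventory-1', 'inventory-2', 'inventory-3']);

// Eight instances for six partitions: c7 and c8 receive nothing.
const eightConsumers = assignPartitions(6, ['c1', 'c2', 'c3', 'c4', 'c5', 'c6', 'c7', 'c8']);
```

The second call illustrates why the partition count caps parallelism within a group: consumers beyond the number of partitions sit idle.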

Benefits of Kafka in Microservices

Massive Scalability: Kafka handles millions of events per second across partitions. LinkedIn processes over 7 trillion messages per day through their Kafka infrastructure.

Durability and Replay: Events are persisted to disk and replicated across brokers. Within the topic’s retention period, you can replay events from any retained offset for recovery, debugging, or reprocessing.

True Decoupling: Producers and consumers don’t need to know about each other. New consumers can be added without modifying producers.

Streaming Analytics: Native integration with stream processing frameworks like Kafka Streams, Apache Flink, and ksqlDB for real-time transformations and aggregations.

Polyglot Support: Client libraries exist for Java, Python, Go, Node.js, Rust, and most major languages.

Event Design Patterns

How you structure events significantly impacts system flexibility and maintainability.

Event Types

// Domain Event - represents what happened

interface OrderCreatedEvent {

eventType: 'OrderCreated';

eventId: string;

timestamp: number;

aggregateId: string; // Order ID

payload: {

customerId: string;

items: OrderItem[];

totalAmount: number;

shippingAddress: Address;

};

metadata: {

correlationId: string;

causationId: string;

version: number;

};

}

// Change Data Capture Event - represents state change

interface UserUpdatedEvent {

eventType: 'UserUpdated';

before: User | null; // Previous state (null on create)

after: User | null; // Current state (null on delete)

op: 'c' | 'u' | 'd'; // Create, Update, Delete

source: {

table: string;

database: string;

timestamp: number;

};

}
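
The `correlationId` and `causationId` fields are easiest to understand by following them through a chain of events. The sketch below assumes one common convention, not something mandated by Kafka: the first event in a flow uses its own ID as the correlation ID, and every follow-up event copies the correlation ID and records its direct parent as the cause. `TraceMetadata`, `rootMetadata`, and `childMetadata` are hypothetical names, and event IDs are passed in as fixed strings where a real system would generate UUIDs.

```typescript
interface TraceMetadata {
  eventId: string;
  correlationId: string; // Shared by every event in the same business flow
  causationId: string;   // ID of the event that directly triggered this one
}

// Assumed convention: the first event starts the chain with its own ID.
function rootMetadata(eventId: string): TraceMetadata {
  return { eventId, correlationId: eventId, causationId: eventId };
}

// A follow-up event keeps the flow's correlationId and points at its parent.
function childMetadata(parent: TraceMetadata, eventId: string): TraceMetadata {
  return {
    eventId,
    correlationId: parent.correlationId,
    causationId: parent.eventId,
  };
}

const orderCreatedMeta = rootMetadata('evt-1');
const paymentChargedMeta = childMetadata(orderCreatedMeta, 'evt-2');
const shipmentScheduledMeta = childMetadata(paymentChargedMeta, 'evt-3');
// shipmentScheduledMeta:
// { eventId: 'evt-3', correlationId: 'evt-1', causationId: 'evt-2' }
```

Filtering logs by `correlationId` then yields the whole flow, while following `causationId` links reconstructs the order in which events triggered each other.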

Event Schema with Avro

// schema/order-created.avsc

{

"type": "record",

"name": "OrderCreated",

"namespace": "com.example.orders",

"fields": [

{ "name": "eventId", "type": "string" },

{ "name": "orderId", "type": "string" },

{ "name": "customerId", "type": "string" },

{ "name": "totalAmount", "type": "double" },

{ "name": "currency", "type": "string", "default": "USD" },

{ "name": "createdAt", "type": { "type": "long", "logicalType": "timestamp-millis" } },

{

"name": "items",

"type": {

"type": "array",

"items": {

"type": "record",

"name": "OrderItem",

"fields": [

{ "name": "productId", "type": "string" },

{ "name": "quantity", "type": "int" },

{ "name": "unitPrice", "type": "double" }

]

}

}

}

]

}

Common Pitfalls and How to Avoid Them

While powerful, designing event-driven systems with Kafka comes with challenges:

Event Schema Evolution

Without proper contracts, breaking changes can cascade through your system.

// BAD: Breaking change - renaming field

// Old: { customerId: "123" }

// New: { userId: "123" } // Breaks all consumers!

// GOOD: Backward-compatible evolution

// Add new field with default, deprecate old field

{

"customerId": "123", // Deprecated but still present

"userId": "123" // New field

}

// Use Schema Registry to enforce compatibility

// Configure: BACKWARD, FORWARD, or FULL compatibility mode
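
On the consumer side, the rename above can be absorbed with a tolerant reader that prefers the new field and falls back to the deprecated one. This is a minimal sketch: `OrderPayload` and `readUserId` are hypothetical names, and it works on plain objects rather than on Avro-decoded records.

```typescript
// Hypothetical payload shape covering both schema versions.
interface OrderPayload {
  customerId?: string; // Deprecated field
  userId?: string;     // New field
}

// Tolerant reader: prefer the new field, fall back to the deprecated one.
function readUserId(payload: OrderPayload): string {
  const id = payload.userId ?? payload.customerId;
  if (id === undefined) {
    throw new Error('payload has neither userId nor customerId');
  }
  return id;
}

const fromOldProducer = readUserId({ customerId: '123' });
const fromNewProducer = readUserId({ customerId: '123', userId: '123' });
const afterOldFieldRemoved = readUserId({ userId: '456' });
```

A consumer written this way keeps working while producers migrate, and continues to work if the deprecated field is eventually dropped.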

Handling Duplicate Events

Network issues and retries mean consumers must handle duplicates gracefully.

// Idempotent consumer pattern

async function processPaymentEvent(event: PaymentEvent) {

// Check if already processed using event ID

const processed = await redis.get(`processed:${event.eventId}`);

if (processed) {

console.log(`Event ${event.eventId} already processed, skipping`);

return;

}

// Process within transaction

await db.transaction(async (tx) => {

await tx.payments.create(event.payload);

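// Mark as processed. Caveat: this Redis write is not part of the database

// transaction, so the marker and the payment are not truly atomic. For a strict

// guarantee, store the event ID in the same database under a unique constraint.

await redis.set(`processed:${event.eventId}`, '1', 'EX', 86400 * 7);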

});

}
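
A stricter variant keeps the "already processed" marker in the same store as the business write, so both succeed or fail together. The sketch below uses an in-memory class as a stand-in for a database: `PaymentStore`, `applyOnce`, and `PaymentEventSketch` are hypothetical names. In a real system the two writes would share one database transaction, with a unique constraint on the event ID.

```typescript
interface PaymentEventSketch {
  eventId: string;
  amount: number;
}

// In-memory stand-in for a database. The processed-ID set and the payments
// list play the role of two tables written in one transaction.
class PaymentStore {
  private processedIds = new Set<string>();
  readonly payments: PaymentEventSketch[] = [];

  // Returns true if the event was applied, false if it was a duplicate.
  applyOnce(evt: PaymentEventSketch): boolean {
    if (this.processedIds.has(evt.eventId)) {
      return false; // Duplicate delivery: skip without side effects
    }
    this.payments.push(evt);
    this.processedIds.add(evt.eventId);
    return true;
  }
}

const store = new PaymentStore();
const firstDelivery = store.applyOnce({ eventId: 'evt-42', amount: 99.5 });
const redelivery = store.applyOnce({ eventId: 'evt-42', amount: 99.5 });
// firstDelivery is true, redelivery is false, and exactly one payment is stored.
```

Because the check and both writes happen in one synchronous method, there is no window in which the payment exists without its marker. A database transaction provides the same property across processes.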

Ordering Guarantees

Kafka only guarantees ordering within a partition, not across partitions.

// Events for same entity must use same partition key

// to maintain ordering

// CORRECT: Order events for order-123 always ordered

await producer.send({

topic: 'order-events',

messages: [{ key: 'order-123', value: event1 }]

});

await producer.send({

topic: 'order-events',

messages: [{ key: 'order-123', value: event2 }]

});

// event1 always processed before event2

// WRONG: No key means the partitioner picks the partition (e.g. round-robin), so there is no ordering guarantee

await producer.send({

topic: 'order-events',

messages: [{ value: event1 }] // No key!

});

Best Practices for Event-Driven Microservices

Use Schema Registry: Enforce strong typing with Avro, Protobuf, or JSON Schema. This catches breaking changes before they reach production.

Design Idempotent Consumers: Every consumer must handle duplicate events safely. Use event IDs and idempotency keys.

Partition Strategically: Choose partition keys that balance throughput with ordering requirements. Too few partitions limit parallelism; too many increase overhead.

Implement Observability: Use distributed tracing with correlation IDs, monitor consumer lag, and set up alerts for processing delays.

// Include tracing context in every event

interface EventMetadata {

eventId: string;

correlationId: string; // Trace across services

causationId: string; // What caused this event

timestamp: number;

source: string; // Producing service

version: number; // Schema version

}

Plan for Schema Evolution: Design events with future changes in mind. Use optional fields, avoid removing fields, and version your schemas.

Implement Dead Letter Queues: Events that fail processing repeatedly should go to a DLQ for investigation rather than blocking the pipeline.
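
The dead letter queue practice can be sketched as "retry a bounded number of times, then park the event and move on". `processWithDlq` and `DeadLetter` are hypothetical names, the handler is synchronous for brevity, and the dead letters are collected in an array. With Kafka, they would typically be produced to a separate topic, and retries would usually include a backoff delay.

```typescript
interface DeadLetter<T> {
  payload: T;
  error: string;
  attempts: number;
}

// Try the handler up to maxAttempts times. If it still fails, record the
// event in deadLetters and return false so the caller can continue.
function processWithDlq<T>(
  payload: T,
  handler: (payload: T) => void,
  deadLetters: DeadLetter<T>[],
  maxAttempts = 3,
): boolean {
  let lastError = '';
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      handler(payload);
      return true;
    } catch (err) {
      lastError = err instanceof Error ? err.message : String(err);
    }
  }
  deadLetters.push({ payload, error: lastError, attempts: maxAttempts });
  return false;
}

const dlq: DeadLetter<string>[] = [];
const handled: string[] = [];

// Simulated handler that always fails for one specific order.
const flakyHandler = (orderId: string): void => {
  if (orderId === 'order-bad') {
    throw new Error('invalid address');
  }
  handled.push(orderId);
};

for (const orderId of ['order-1', 'order-bad', 'order-2']) {
  processWithDlq(orderId, flakyHandler, dlq);
}
// handled is ['order-1', 'order-2']; dlq holds 'order-bad' with attempts = 3.
```

The poison event ends up in `dlq` with its last error message, and `order-2` is still processed instead of being stuck behind it.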

When to Use Kafka vs Alternatives

Not every system needs Kafka’s power and complexity.

Choose Kafka for: High-throughput distributed event streaming at scale, event sourcing and CQRS patterns, real-time data pipelines and analytics, systems requiring event replay capability.

Consider RabbitMQ for: Lightweight messaging patterns, complex routing requirements, lower operational overhead, when you don’t need long-term event retention or replay.

Use REST/gRPC when: Synchronous request-response flows are required, you need immediate consistency guarantees, the system is simple enough not to need asynchronous patterns.

Conclusion

Designing event-driven microservices with Kafka helps teams build systems that are fast, reliable, and easy to scale. The architecture enables loose coupling between services, real-time data flows, and resilience against individual service failures. The most important things to get right from the start are clear event structures with proper schema management, idempotent consumers that handle duplicates safely, strategic partitioning for ordering guarantees, and comprehensive observability for debugging distributed flows. While Kafka adds operational complexity, the benefits of scalability and decoupling make it worthwhile for systems that need to handle high throughput and real-time processing. If you’re interested in protocol comparisons for your APIs, check out REST vs GraphQL vs gRPC: Selecting the Right Protocol. To dive deeper into advanced Kafka usage and cluster operations, explore the Apache Kafka Documentation.
