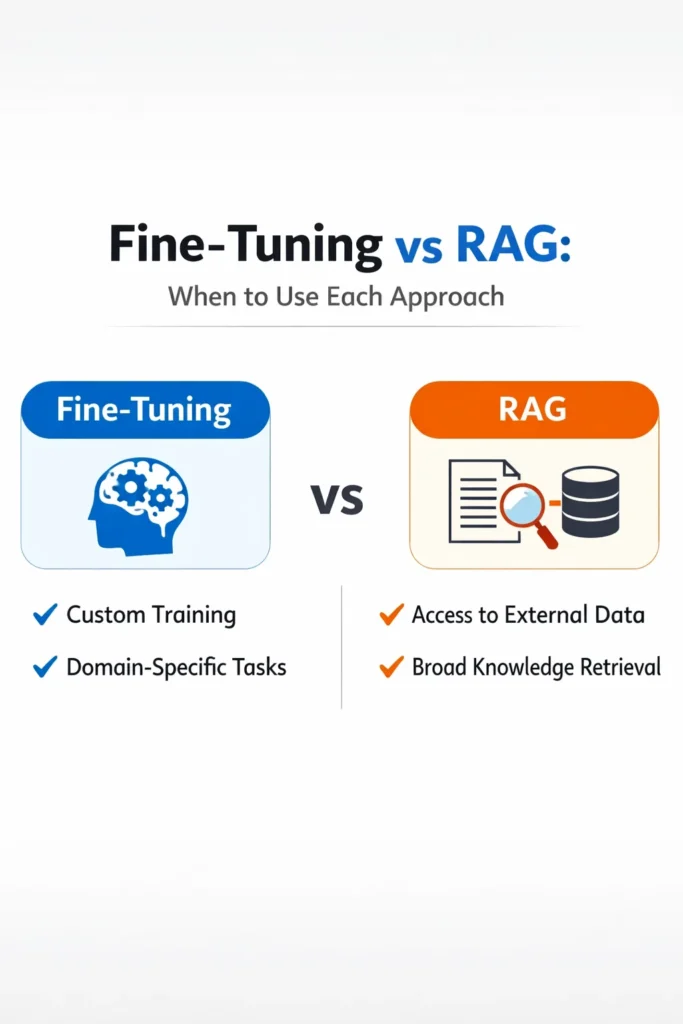

As large language models mature, developers face a recurring question: should you fine-tune a model, or should you use retrieval-augmented generation (RAG)? Both approaches improve model behavior, but they solve different problems. Choosing the wrong one leads to unnecessary complexity, higher costs, or brittle systems.

This guide explains fine-tuning vs RAG from a practical, production-focused perspective. You will learn what each approach actually does, where it shines, where it breaks down, and how to decide which one fits your use case.

Why This Choice Matters

At a glance, fine-tuning and RAG seem interchangeable. Both promise better answers, more relevance, and greater control. In practice, they operate at entirely different layers of the system.

Fine-tuning changes how the model behaves.

RAG changes what information the model sees.

Understanding this distinction early prevents architectural mistakes that are expensive to undo later.

If you have already explored RAG from scratch, you have seen how retrieval grounds model output in external knowledge. Fine-tuning takes a different path.

What Fine-Tuning Actually Does

Fine-tuning adjusts a model’s internal weights using additional training data. The goal is to make the model behave more consistently for a specific task, style, or domain.

Fine-tuning is well suited for:

- Consistent tone or formatting

- Domain-specific language patterns

- Classification or transformation tasks

- Reducing prompt complexity

After fine-tuning, the model “knows” how to respond without being reminded every time.

However, fine-tuning is a poor way to give the model facts that change frequently. Whatever the model absorbs during training is frozen at training time, so its knowledge stays static until you retrain it.
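To make "additional training data" concrete, here is a minimal sketch of how curated examples might be prepared for a fine-tuning job. It assumes a JSON Lines file with `prompt` and `completion` fields; that layout, the `EXAMPLES` data, and the `to_jsonl` helper are illustrative assumptions, and each provider or training framework defines its own required format.

```python
import json

# Hypothetical examples. Note that every completion follows the same
# structure: that consistency is what the model is meant to learn.
EXAMPLES = [
    {
        "prompt": "How do I reset my password?",
        "completion": "Summary: Reset it from the login page.\nSteps:\n1. Click 'Forgot password'.\n2. Follow the emailed link.",
    },
    {
        "prompt": "Can I change my billing email?",
        "completion": "Summary: Yes, from account settings.\nSteps:\n1. Open Settings.\n2. Edit the billing email field.",
    },
]


def to_jsonl(examples):
    """Serialize examples to JSON Lines, skipping incomplete records."""
    lines = []
    for ex in examples:
        prompt = ex.get("prompt", "").strip()
        completion = ex.get("completion", "").strip()
        if not prompt or not completion:
            continue  # noisy or empty records hurt more than they help
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_jsonl(EXAMPLES))
```

Notice that the examples teach a response shape, not a set of facts. That is the kind of signal fine-tuning handles well.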

What RAG Actually Does

RAG does not modify the model. Instead, it retrieves relevant context at runtime and injects it into the prompt.

This makes RAG ideal for:

- Frequently changing data

- Large knowledge bases

- Auditable, source-backed answers

- Reducing hallucinations

Because retrieval happens at request time, updating knowledge means re-indexing data, not retraining models.
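The retrieve-then-inject flow can be sketched in a few lines. This is a toy illustration only: it scores documents with bag-of-words cosine similarity instead of the embedding model and vector store a production pipeline would typically use, and the `DOCS` contents, file names, and prompt wording are all made up for the example.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: document name -> text.
DOCS = {
    "billing.md": "Invoices are issued on the first day of each month.",
    "sso.md": "Single sign-on is configured under Settings, Security.",
    "export.md": "Data export produces a CSV file of all projects.",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=2):
    """Return the names of up to k documents that overlap with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), name) for name, text in docs.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:k] if score > 0]


def build_prompt(query, docs, k=2):
    """Inject the retrieved passages, labeled by source, into the prompt."""
    sources = retrieve(query, docs, k)
    context = "\n".join(f"[{name}] {docs[name]}" for name in sources)
    return f"Answer using only the sources below.\n\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("When are invoices issued?", DOCS))
```

Updating knowledge here means editing `DOCS`; nothing about the model changes. Labeling each passage with its source name is also what makes answers auditable.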

If you are already designing systems with structured prompts and tools, ideas from prompt engineering best practices for developers apply directly to RAG pipelines as well.

Fine-Tuning vs RAG: Core Differences

The most important differences are architectural, not conceptual.

Fine-tuning:

- Alters model behavior by changing its weights; the change persists until you retrain

- Requires curated training data

- Is slower to iterate

- Excels at style and task consistency

RAG:

- Injects knowledge dynamically

- Requires retrieval infrastructure

- Is faster to update

- Excels at factual accuracy and freshness

Because of this, the two approaches are not competitors. They are complementary.

A Realistic Decision Scenario

Consider a support chatbot for a SaaS product.

The chatbot must:

- Answer questions about current documentation

- Follow a consistent, professional tone

- Output responses in a specific structure

Using fine-tuning alone fails because documentation changes weekly. The model would constantly drift out of date.

Using RAG alone works for knowledge accuracy, but responses vary in tone and structure.

The correct solution is a hybrid approach: fine-tune the model for tone and response format, and use RAG to supply up-to-date knowledge. This pattern appears repeatedly in production systems.

When Fine-Tuning Is the Right Choice

Fine-tuning is a good fit when:

- Output style must be consistent

- The task is narrow and well-defined

- Domain language is specialized

- Prompt complexity is becoming unmanageable

Examples include sentiment classification, structured extraction, or branded response generation.

When Fine-Tuning Is the Wrong Choice

Avoid fine-tuning when:

- Data changes frequently

- You need traceable sources

- Training data is limited or noisy

- Iteration speed is critical

In these cases, fine-tuning adds rigidity without solving the core problem.

When RAG Is the Right Choice

RAG excels when:

- Knowledge updates frequently

- Answers must be grounded in sources

- You need transparency and auditability

- The knowledge base is too large to fit in a single prompt

This is why RAG is commonly used for internal documentation, search assistants, and knowledge-based chatbots.

When RAG Is the Wrong Choice

RAG is not ideal when:

- The task is purely creative

- Output structure must be identical every time

- Latency budgets are extremely tight

- Retrieval infrastructure adds unnecessary overhead

In these cases, RAG may introduce more complexity than value.

Cost, Latency, and Maintenance Trade-offs

Fine-tuning incurs upfront cost and ongoing retraining overhead. However, inference can be cheaper because prompts are shorter.

RAG adds runtime cost due to retrieval and larger prompts. However, maintenance is often easier because updating data does not require retraining.
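A rough back-of-the-envelope model makes this trade-off easier to reason about. The sketch below is only an illustration: every number in it (token counts, per-token prices, fixed monthly costs) is invented, and real pricing varies by provider and model.

```python
def monthly_cost(requests, prompt_tokens, output_tokens,
                 price_in, price_out, fixed=0.0):
    """Estimate monthly cost. Prices are per 1,000 tokens."""
    per_request = (prompt_tokens * price_in + output_tokens * price_out) / 1000
    return fixed + requests * per_request


# Hypothetical figures, chosen only to show the shape of the trade-off.
# Fine-tuned: short prompts, but a larger fixed cost for periodic retraining.
FINE_TUNED = dict(prompt_tokens=200, output_tokens=300,
                  price_in=0.001, price_out=0.002, fixed=500.0)
# RAG: prompts carry retrieved context, with a smaller fixed cost for retrieval.
RAG = dict(prompt_tokens=1500, output_tokens=300,
           price_in=0.001, price_out=0.002, fixed=100.0)

if __name__ == "__main__":
    for requests in (100_000, 1_000_000):
        ft = monthly_cost(requests, **FINE_TUNED)
        rag = monthly_cost(requests, **RAG)
        print(f"{requests:>9} requests: fine-tuned {ft:.2f}, RAG {rag:.2f}")
```

With these made-up inputs, RAG is cheaper at 100,000 requests per month (about 310 versus 580), while the fine-tuned setup is cheaper at 1,000,000 (about 1,300 versus 2,200). The crossover depends entirely on your own volumes and prices, which is why it is worth plugging in real numbers before deciding.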

From a systems perspective, this trade-off mirrors decisions discussed in REST vs GraphQL vs gRPC, where flexibility and structure must be balanced deliberately.

Hybrid Approaches Are the Norm

In mature systems, fine-tuning and RAG are rarely used in isolation.

A common architecture looks like this:

- Fine-tuned model for tone, structure, and behavior

- RAG pipeline for factual grounding

- Tools for deterministic actions

- Streaming for better UX

This layered approach keeps each component focused on what it does best.
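As a sketch of how the first two layers fit together, the function below wires a retriever to a generator. Both are passed in as plain callables, and the versions shown here are stand-ins: `keyword_retrieve` is a naive keyword matcher over a made-up `KB`, and `stub_generate` merely echoes its prompt where a call to your fine-tuned model would go.

```python
from typing import Callable, List

# Hypothetical knowledge base for the example.
KB = [
    "Exports are limited to 10,000 rows.",
    "SSO requires the Enterprise plan.",
]


def answer(question: str,
           retrieve: Callable[[str], List[str]],
           generate: Callable[[str], str]) -> str:
    """RAG supplies the facts; the (fine-tuned) generator supplies the style."""
    passages = retrieve(question)
    context = "\n".join(f"- {p}" for p in passages)
    # No tone or formatting instructions here: in the hybrid setup the
    # fine-tuned model is assumed to handle those on its own.
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return generate(prompt)


def keyword_retrieve(question: str) -> List[str]:
    """Stand-in retriever: return passages sharing any word with the question."""
    words = set(question.lower().replace("?", "").split())
    return [p for p in KB if words & set(p.lower().rstrip(".").split())]


def stub_generate(prompt: str) -> str:
    """Stand-in for a fine-tuned model call; echoes the prompt it received."""
    return "MODEL OUTPUT FOR PROMPT:\n" + prompt


if __name__ == "__main__":
    print(answer("Which plan includes SSO?", keyword_retrieve, stub_generate))
```

Keeping retrieval and generation behind separate callables means you can re-index data or swap in a newly fine-tuned model independently, which is the main practical benefit of the layered design.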

Common Mistakes When Choosing Between Fine-Tuning and RAG

One common mistake is fine-tuning to “add knowledge.” This fails quickly as data changes.

Another is using RAG to enforce formatting or tone. Retrieval does not guarantee stylistic consistency.

Finally, many teams choose based on hype rather than requirements. Both approaches are powerful, but neither is universally correct.

Conclusion

Fine-tuning vs RAG is not an either–or decision. Fine-tuning shapes how a model behaves. RAG controls what it knows at runtime. The correct choice depends on whether your problem is behavioral, informational, or both.

A practical next step is to map your requirements explicitly. If your problem is about consistency, start with fine-tuning. If it is about knowledge freshness, start with RAG. In many real systems, the optimal solution combines both.
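One way to make that mapping explicit is to write the requirements down as a small checklist and derive a starting point from it. The sketch below is a simplification of the guidance in this article, not a definitive rule; the `Requirements` fields and the `recommend` function are names invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    knowledge_changes_often: bool
    needs_source_citations: bool
    needs_consistent_style: bool
    narrow_well_defined_task: bool


def recommend(req: Requirements) -> str:
    """Suggest a starting point based on the kind of problem described."""
    informational = req.knowledge_changes_often or req.needs_source_citations
    behavioral = req.needs_consistent_style or req.narrow_well_defined_task
    if informational and behavioral:
        return "hybrid"
    if informational:
        return "rag"
    if behavioral:
        return "fine-tuning"
    return "neither"


if __name__ == "__main__":
    # The SaaS support chatbot from earlier: docs change weekly and the
    # tone and structure must stay consistent.
    chatbot = Requirements(
        knowledge_changes_often=True,
        needs_source_citations=False,
        needs_consistent_style=True,
        narrow_well_defined_task=False,
    )
    print(recommend(chatbot))
```

A real decision would also weigh latency budgets, training data quality, and cost, but even a checklist this small forces the behavioral-versus-informational question into the open.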
