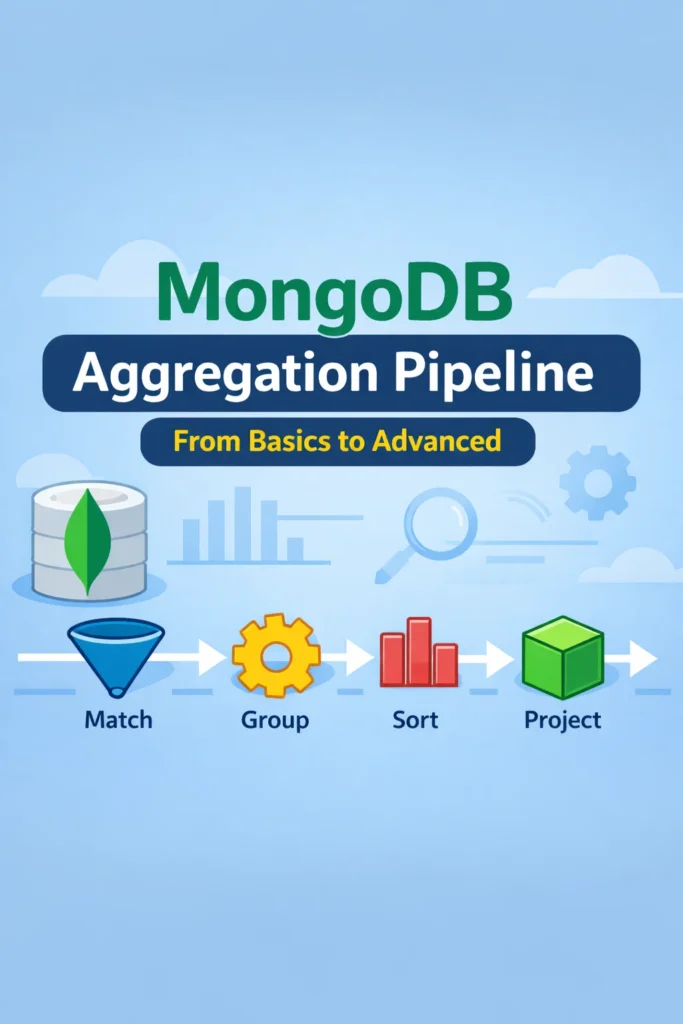

MongoDB’s aggregation pipeline is one of its most powerful features—and one of the most misunderstood. It allows you to transform, filter, group, and compute data directly inside the database, often replacing complex application-side logic.

This article walks through the MongoDB aggregation pipeline from first principles to advanced usage. The goal is not to memorize operators, but to understand how to think in pipelines and design aggregations that are readable, efficient, and production-safe.

Why the Aggregation Pipeline Exists

MongoDB documents are flexible by design. That flexibility makes simple queries easy, but real applications need reporting, analytics, and data shaping that go beyond basic filters.

The aggregation pipeline solves this by letting you process documents step by step. Each stage takes the output of the previous stage and transforms it further. Instead of writing nested loops in application code, you describe what you want to happen to the data.

If this idea feels familiar, it mirrors how SQL queries are composed and optimized. Many of the same performance principles discussed in PostgreSQL performance tuning apply here as well, even though the syntax is different.

How the Aggregation Pipeline Works

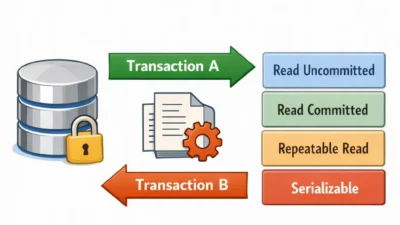

An aggregation pipeline is an ordered list of stages. Documents flow through the pipeline from top to bottom.

Each stage:

- Receives documents from the previous stage

- Applies a transformation

- Outputs a new stream of documents

This model encourages clarity. Instead of doing everything at once, each transformation is explicit.

Understanding this flow is the key to writing maintainable aggregations.
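To make the flow concrete, here is a toy in-memory model of the idea, written in Python. It is not how MongoDB executes pipelines internally; the stage functions and the sample `users` data are hypothetical and exist only to show each stage consuming the previous stage's output.

```python
def match_active(docs):
    """Toy stand-in for a $match stage: keep only active documents."""
    for doc in docs:
        if doc.get("status") == "active":
            yield doc


def project_name(docs):
    """Toy stand-in for a $project stage: keep only the name field."""
    for doc in docs:
        yield {"name": doc["name"]}


def run_pipeline(docs, stages):
    """Feed each stage's output into the next stage, in order."""
    stream = docs
    for stage in stages:
        stream = stage(stream)
    return list(stream)


# Hypothetical sample documents.
users = [
    {"name": "Ada", "status": "active"},
    {"name": "Bob", "status": "inactive"},
    {"name": "Cy", "status": "active"},
]

result = run_pipeline(users, [match_active, project_name])
print(result)  # [{'name': 'Ada'}, {'name': 'Cy'}]
```

Swapping the order of the two stages would break the filter, because the projected documents no longer carry a `status` field. Stage order is part of the logic.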

Core Aggregation Stages You Must Know

Some stages appear in almost every real-world pipeline.

$match: Filtering Early

$match filters documents, similar to a WHERE clause in SQL. Placing $match as early as possible reduces the amount of data processed by later stages.

Early filtering is one of the most important performance optimizations in MongoDB aggregations. When $match is the first stage, it can also use an index, just as a regular find query would.
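As a minimal sketch, a pipeline that filters first might look like the following in Python. The `orders` collection and the `status`, `createdAt`, and `customerId` fields are assumptions made for illustration.

```python
from datetime import datetime, timezone

# Hypothetical cutoff: only consider orders created in 2024 or later.
since = datetime(2024, 1, 1, tzinfo=timezone.utc)

match_first_pipeline = [
    # Stage 1: discard irrelevant documents before any other work happens.
    {"$match": {"status": "completed", "createdAt": {"$gte": since}}},
    # Stage 2: only the filtered documents reach the grouping stage.
    {"$group": {"_id": "$customerId", "orderCount": {"$sum": 1}}},
]

# With PyMongo, a list like this could be passed to Collection.aggregate,
# for example: db.orders.aggregate(match_first_pipeline)
```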

$project: Shaping Documents

$project controls which fields are included, excluded, or computed. It allows you to rename fields, calculate derived values, and reduce payload size.

Overusing $project late in the pipeline often hides inefficiencies. Thoughtful projection early improves both performance and readability.
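A small sketch of a $project stage that excludes, renames, and computes fields in one step. The field names (`customerId`, `price`, `quantity`) are hypothetical.

```python
project_stage = {
    "$project": {
        "_id": 0,                      # exclude the default _id field
        "customer": "$customerId",     # rename customerId to customer
        "lineTotal": {"$multiply": ["$price", "$quantity"]},  # derived value
    }
}

# Only "customer" and "lineTotal" would remain in the output documents.
output_fields = [name for name, spec in project_stage["$project"].items() if spec != 0]
print(output_fields)  # ['customer', 'lineTotal']
```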

$group: Aggregation and Reduction

$group is where aggregation happens. It allows you to compute totals, averages, counts, and more by grouping documents on a key.

Because $group reshapes data entirely, it is usually one of the most expensive stages. Designing grouping keys carefully matters a lot.
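For illustration, a $group stage that computes a total, an average, and a count per region could be written like this. The `region` and `amount` fields are assumed, not taken from a real schema.

```python
group_stage = {
    "$group": {
        "_id": "$region",                   # grouping key: one output document per region
        "totalRevenue": {"$sum": "$amount"},
        "averageOrder": {"$avg": "$amount"},
        "orderCount": {"$sum": 1},          # adding 1 per document yields a count
    }
}
```

Everything not named in the stage is dropped from the output, which is why the choice of `_id` and accumulators defines the entire shape of what later stages see.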

$sort, $limit, and $skip

Sorting and pagination stages control ordering and result size. A $sort that cannot use an index has to sort its input in memory, which gets expensive on large datasets, so filter before sorting and apply $limit as soon after the sort as the logic allows.

These concerns echo patterns discussed in database migrations in production, where understanding data size and access patterns is critical before making changes.
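The sort and pagination stages above can be sketched as a small helper. The function name `page_stages` and the `createdAt` field are hypothetical; the point is the order of the stages: sort, then skip, then limit.

```python
def page_stages(page, page_size):
    """Return $sort/$skip/$limit stages for a 1-based page number."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    return [
        # Sort on createdAt, with _id as a tie-breaker so page order is stable.
        {"$sort": {"createdAt": -1, "_id": 1}},
        {"$skip": (page - 1) * page_size},
        {"$limit": page_size},
    ]


print(page_stages(3, 20)[1])  # {'$skip': 40}
```

Note that $skip still has to walk past the skipped documents, so very deep pages get slower. Filtering on the last seen sort key is a common alternative for large result sets.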

Thinking in Pipelines Instead of Queries

A common mistake is trying to replicate application logic inside a single aggregation stage. This usually leads to unreadable pipelines.

A better approach is to think incrementally:

- Filter the dataset

- Shape the data

- Aggregate or enrich

- Finalize the output

This mindset makes pipelines easier to reason about and debug.
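One way to apply this in code is to name each step and compose the pipeline from the pieces. This is a sketch only; the stage contents and field names are assumptions.

```python
# 1. Filter the dataset
filter_stages = [{"$match": {"status": "completed"}}]

# 2. Shape the data
shape_stages = [{"$project": {"region": 1, "amount": 1}}]

# 3. Aggregate or enrich
aggregate_stages = [{"$group": {"_id": "$region", "revenue": {"$sum": "$amount"}}}]

# 4. Finalize the output
final_stages = [{"$sort": {"revenue": -1}}, {"$limit": 10}]

pipeline = filter_stages + shape_stages + aggregate_stages + final_stages

stage_names = [next(iter(stage)) for stage in pipeline]
print(stage_names)  # ['$match', '$project', '$group', '$sort', '$limit']
```

Because each piece is a plain list, a partial pipeline such as `filter_stages + shape_stages` can be run on its own while debugging to inspect the intermediate documents.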

Joining Data with $lookup

$lookup allows MongoDB to join collections. While MongoDB is not a relational database, $lookup enables many relational-style patterns when used carefully.

Used sparingly, $lookup simplifies data access. Overused, it can become a performance bottleneck.

If your pipeline relies heavily on $lookup, it is often worth revisiting your data model or considering whether a relational database might be a better fit. Trade-offs like these are explored in PostgreSQL JSONB best practices, where hybrid modeling decisions are common.
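A minimal sketch of a $lookup used carefully: filter first, then join only the documents that survive. The `orders` and `customers` collections and their fields are hypothetical.

```python
lookup_pipeline = [
    # Shrink the input before joining.
    {"$match": {"status": "completed"}},
    # Join each order to its customer; matches land in the "customer" array.
    {
        "$lookup": {
            "from": "customers",
            "localField": "customerId",
            "foreignField": "_id",
            "as": "customer",
        }
    },
    # Flatten the one-element array into an embedded document.
    # By default, $unwind drops orders that matched no customer.
    {"$unwind": "$customer"},
]
```

An index on the foreign field (here `_id`, which is always indexed) matters, because the lookup runs once per input document.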

Advanced Pipeline Patterns

As pipelines grow more complex, certain patterns emerge.

Faceted Aggregations with $facet

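$facet lets you run multiple sub-pipelines within a single stage, each over the same set of input documents. This is useful for dashboards where multiple metrics are derived from the same dataset. The stage emits one document containing every sub-pipeline's results, so that document must stay within the BSON size limit.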
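As a sketch, a dashboard-style $facet stage might look like this. The facet names and the `status`, `customerId`, and `amount` fields are assumptions.

```python
facet_stage = {
    "$facet": {
        # Count of orders per status.
        "byStatus": [
            {"$group": {"_id": "$status", "count": {"$sum": 1}}},
        ],
        # Five highest-spending customers.
        "topCustomers": [
            {"$group": {"_id": "$customerId", "spent": {"$sum": "$amount"}}},
            {"$sort": {"spent": -1}},
            {"$limit": 5},
        ],
        # Grand total; a None (null) _id groups every document together.
        "totals": [
            {"$group": {"_id": None, "revenue": {"$sum": "$amount"}}},
        ],
    }
}

print(sorted(facet_stage["$facet"]))  # ['byStatus', 'topCustomers', 'totals']
```

The output is a single document with one array field per facet name.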

Conditional Logic with $cond and $switch

Conditional expressions allow pipelines to branch logic without leaving the database. This is powerful but should be used carefully to avoid unreadable stages.
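A short sketch of both operators inside an $addFields stage. The `amount` and `discountCode` fields, the thresholds, and the labels are all hypothetical.

```python
conditional_stage = {
    "$addFields": {
        # $switch: the first matching branch wins, otherwise the default is used.
        "tier": {
            "$switch": {
                "branches": [
                    {"case": {"$gte": ["$amount", 1000]}, "then": "high"},
                    {"case": {"$gte": ["$amount", 100]}, "then": "medium"},
                ],
                "default": "low",
            }
        },
        # $cond: a two-way if/then/else; a missing field compares equal to null.
        "discounted": {
            "$cond": {
                "if": {"$ne": ["$discountCode", None]},
                "then": True,
                "else": False,
            }
        },
    }
}
```

Keeping each conditional in its own named field, rather than nesting them, is one way to keep such stages readable.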

Array Processing with $unwind

$unwind expands arrays into individual documents. It is often required before grouping or filtering array elements, but it can multiply document counts dramatically.

Understanding the impact of $unwind is critical for performance-sensitive pipelines.
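The multiplication effect is easy to estimate before running anything. In this sketch, the sample `orders` documents and the `items` array are hypothetical; the pipeline unwinds the array and then totals quantities per SKU.

```python
# Hypothetical sample documents with an embedded array.
orders = [
    {"_id": 1, "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},
    {"_id": 2, "items": [{"sku": "A", "qty": 5}]},
]

# $unwind emits one document per array element, so the stream grows
# from len(orders) documents to the total number of array elements.
docs_before = len(orders)
docs_after = sum(len(order["items"]) for order in orders)
print(docs_before, docs_after)  # 2 3

unwind_pipeline = [
    {"$unwind": "$items"},
    {"$group": {"_id": "$items.sku", "totalQty": {"$sum": "$items.qty"}}},
]
```

With arrays of hundreds of elements, the same arithmetic shows why a $match placed before $unwind pays off.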

Performance Considerations in Aggregations

Aggregation pipelines can be fast, but only when designed correctly.

Key performance guidelines include:

- Filter as early as possible

- Limit fields before expensive stages

- Avoid unnecessary $lookup operations

- Use indexes that support $match and $sort stages

MongoDB provides explain() for aggregations, which is essential for understanding execution behavior. This practice aligns closely with ideas from profiling CPU and memory usage in backend applications, where visibility drives optimization.
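As a sketch of how an explain request for an aggregation can be assembled from Python, the helper below builds the `explain` database command as a plain document. The helper name `build_explain_command` and the `orders` collection are hypothetical. With PyMongo, a document like this could be sent through `Database.command`.

```python
def build_explain_command(collection_name, pipeline, verbosity="executionStats"):
    """Build an explain command document that wraps an aggregate command."""
    return {
        # The command name must be the first key in the document.
        "explain": {
            "aggregate": collection_name,
            "pipeline": pipeline,
            "cursor": {},
        },
        # One of "queryPlanner", "executionStats", "allPlansExecution".
        "verbosity": verbosity,
    }


explain_cmd = build_explain_command(
    "orders",
    [{"$match": {"status": "completed"}}, {"$sort": {"createdAt": -1}}],
)
print(list(explain_cmd))  # ['explain', 'verbosity']

# Assumed usage with PyMongo: plan = db.command(explain_cmd)
```

In the returned plan, the first things worth checking are whether the initial $match used an index scan or a full collection scan, and how many documents each stage examined.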

A Realistic Aggregation Use Case

Imagine an analytics dashboard that shows daily active users, grouped by region, with rolling averages.

Without aggregation, this logic would require multiple queries and application-side processing. With a well-designed pipeline, MongoDB can compute everything in one pass, returning exactly the shape the UI needs.

This reduces data transfer, simplifies code, and centralizes logic where it belongs.
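One possible shape for such a pipeline is sketched below. It assumes an `events` collection with `userId`, `region`, and `timestamp` fields, and it relies on `$dateTrunc` and `$setWindowFields`, which require MongoDB 5.0 or later. Treat it as a starting point under those assumptions rather than a tuned implementation.

```python
from datetime import datetime, timezone


def dau_pipeline(start, end, window_days=7):
    """Daily active users per region with a rolling average (sketch)."""
    return [
        # 1. Filter: only events inside the reporting window.
        {"$match": {"timestamp": {"$gte": start, "$lt": end}}},
        # 2. Aggregate: collect the distinct users per region and day.
        {"$group": {
            "_id": {
                "region": "$region",
                "day": {"$dateTrunc": {"date": "$timestamp", "unit": "day"}},
            },
            "users": {"$addToSet": "$userId"},
        }},
        # 3. Shape: flatten the key and count the distinct users.
        {"$project": {
            "_id": 0,
            "region": "$_id.region",
            "day": "$_id.day",
            "activeUsers": {"$size": "$users"},
        }},
        # 4. Enrich: average over the current row and the preceding rows.
        #    A documents window counts rows, so days without any events
        #    are not treated as zero here.
        {"$setWindowFields": {
            "partitionBy": "$region",
            "sortBy": {"day": 1},
            "output": {"rollingAvg": {
                "$avg": "$activeUsers",
                "window": {"documents": [-(window_days - 1), 0]},
            }},
        }},
        # 5. Finalize: stable ordering for the UI.
        {"$sort": {"region": 1, "day": 1}},
    ]


pipeline = dau_pipeline(
    datetime(2024, 1, 1, tzinfo=timezone.utc),
    datetime(2024, 2, 1, tzinfo=timezone.utc),
)
```

Collecting user IDs with `$addToSet` keeps the sketch short, but the per-group sets can grow large for busy regions, so this step is the first one to revisit at scale.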

Common Aggregation Pipeline Mistakes

Several mistakes appear repeatedly:

- Filtering after grouping when the same filter could run before it

- Building monolithic pipelines instead of incremental ones

- Ignoring index usage

- Treating $lookup as free

Each of these increases cost and complexity without adding value.
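The first mistake is the easiest to show side by side. In this sketch, both pipelines return the revenue for one region, but only the second lets the filter run before the expensive stage. The `region` and `amount` fields are hypothetical.

```python
# Filter late: every region is grouped, then all but one are thrown away.
filter_late = [
    {"$group": {"_id": "$region", "revenue": {"$sum": "$amount"}}},
    {"$match": {"_id": "EU"}},
]

# Filter early: only EU documents ever reach the $group stage,
# and the leading $match is able to use an index on region.
filter_early = [
    {"$match": {"region": "EU"}},
    {"$group": {"_id": "$region", "revenue": {"$sum": "$amount"}}},
]

print([next(iter(s)) for s in filter_early])  # ['$match', '$group']
```

A filter on an aggregated value, such as keeping only regions whose `revenue` exceeds a threshold, legitimately belongs after $group, because the value does not exist before it.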

When the Aggregation Pipeline Is a Great Fit

MongoDB aggregation shines when:

- Data is document-oriented

- Transformations are complex

- Read performance matters

- You want to reduce application logic

When to Reconsider Aggregations

Aggregation may not be ideal when:

- Queries resemble complex relational joins

- Transactions across entities dominate

- Reporting requirements are extremely heavy

In those cases, a relational database or hybrid approach may be more appropriate.

Aggregation Pipelines in Larger Architectures

Aggregation pipelines often sit behind APIs that serve dashboards, reports, or internal tools. Designing them as stable, versioned components improves long-term maintainability.
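One hypothetical way to treat pipelines as versioned components is a small registry of builder functions. All names here (`revenue_by_region_v1`, `PIPELINES`, `get_pipeline`) are illustrative assumptions, not an established convention.

```python
def revenue_by_region_v1(start, end):
    """Version 1: total revenue per region for a date range."""
    return [
        {"$match": {"createdAt": {"$gte": start, "$lt": end}}},
        {"$group": {"_id": "$region", "revenue": {"$sum": "$amount"}}},
        {"$sort": {"revenue": -1}},
    ]


# Registry: report name -> version -> builder function.
PIPELINES = {
    "revenue_by_region": {"v1": revenue_by_region_v1},
}


def get_pipeline(name, version, **params):
    """Look up a builder by name and version, then build the pipeline."""
    return PIPELINES[name][version](**params)


built = get_pipeline("revenue_by_region", "v1", start="2024-01-01", end="2024-02-01")
print(len(built))  # 3
```

A changed output shape becomes a new version next to the old one, so API consumers can migrate on their own schedule.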

If you are already structuring backend systems around clear responsibilities, ideas from API gateway patterns for SaaS applications apply just as much to data access layers.

Conclusion

The MongoDB aggregation pipeline is not just a query feature—it is a data processing framework. When you learn to think in stages, pipelines become easier to write, easier to optimize, and easier to trust.

A practical next step is to take one existing reporting query and rewrite it as a pipeline. Break it into stages, inspect performance, and iterate. Mastery of the aggregation pipeline comes from understanding flow, not memorizing operators.