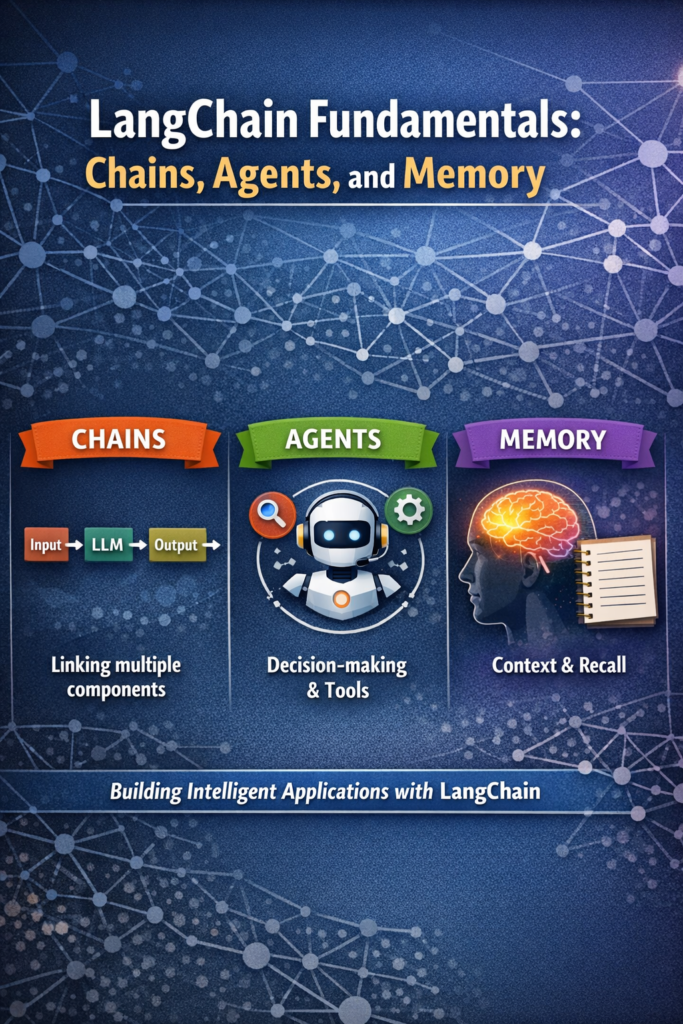

LangChain is often introduced as a convenience library for LLM apps. In practice, it is an architectural toolkit. Teams that treat it as a thin wrapper around prompts usually hit complexity walls quickly. This article explains how chains, agents, and memory fit together, when each abstraction helps, and where it can hurt if misused.

If you already know how to call an LLM API but want to design reliable AI features that evolve beyond demos, this guide focuses on decisions you will need to make early.

How LangChain Fits Into Modern AI App Architecture

LangChain sits between your application code and LLM providers. It standardizes prompt execution, tool orchestration, retrieval, and state handling. That role is similar to how backend frameworks structure HTTP or messaging workflows.

If you are coming from API-heavy systems, the same separation-of-concerns mindset discussed in API gateway patterns for SaaS applications applies directly here.

For reference, the official project overview is documented in the LangChain documentation, which defines these abstractions at a conceptual level.

Chains: Deterministic LLM Pipelines

Chains are the simplest LangChain abstraction. A chain is a predefined sequence of steps where inputs flow through prompts, models, and optional transformations to produce outputs.

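    # Classic LangChain API; newer releases deprecate LLMChain in favor of composing `prompt | llm`.
    from langchain.prompts import PromptTemplate
    from langchain.llms import OpenAI
    from langchain.chains import LLMChain

    # Prompt template with a single input variable
    prompt = PromptTemplate(
        input_variables=["question"],
        template="Explain this concept clearly: {question}",
    )

    # temperature=0 keeps the output as repeatable as the model allows
    llm = OpenAI(temperature=0)
    chain = LLMChain(llm=llm, prompt=prompt)

    # The single positional argument fills the "question" variable
    result = chain.run("dependency injection")
    print(result)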

When Chains Are the Right Tool

Chains work best when the workflow is predictable. Summarization, classification, and structured extraction often fit cleanly into a chain. Because the sequence of steps is fixed, even though model output can still vary between runs, chains are easier to test and reason about than agents.

This mirrors classic backend pipelines, where predictable flows outperform dynamic logic. If this sounds familiar, it aligns closely with ideas in clean architecture in Flutter, even though the domain is different.

Common Chain Pitfalls

A frequent mistake is overloading a single chain with too many responsibilities. Long chains become fragile, especially when intermediate outputs are not validated. In production systems, multiple small chains composed together are usually more reliable.
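A minimal sketch of that composition, written in plain Python rather than against LangChain's API: each step is a small function, and a validator runs on every intermediate output. The names `fake_llm`, `summarize`, `classify`, `validate_nonempty`, and `compose` are hypothetical and exist only for illustration; a real pipeline would call a model where `fake_llm` is used.

```python
from typing import Callable

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call (assumption: swap in a real client)."""
    if prompt.startswith("Summarize:"):
        return prompt[len("Summarize:"):].strip()[:40]
    if prompt.startswith("Classify:"):
        return "billing" if "invoice" in prompt.lower() else "other"
    return ""


def summarize(text: str) -> str:
    return fake_llm(f"Summarize: {text}")


def classify(summary: str) -> str:
    return fake_llm(f"Classify: {summary}")


def validate_nonempty(name: str, value: str) -> str:
    """Fail fast instead of passing a bad intermediate result downstream."""
    if not value.strip():
        raise ValueError(f"step '{name}' produced empty output")
    return value


def compose(*steps: Step) -> Step:
    """Run the steps in order, validating each intermediate output."""
    def pipeline(value: str) -> str:
        for step in steps:
            value = validate_nonempty(step.__name__, step(value))
        return value
    return pipeline


triage = compose(summarize, classify)
label = triage("Customer says the invoice total is wrong")
print(label)
```

Because each step is small, a failure points at one named step instead of somewhere inside a long chain.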

Agents: Dynamic Reasoning and Tool Selection

Agents extend chains by adding decision-making. Instead of following a fixed sequence, an agent decides which tool or step to execute next based on the current state.

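    # Classic LangChain API; newer releases deprecate initialize_agent.
    from langchain.agents import initialize_agent, Tool
    from langchain.llms import OpenAI

    def search_function(query: str) -> str:
        """Placeholder tool body; replace with a real documentation search."""
        return f"No results found for: {query}"

    # The description is what the model reads when deciding which tool to call
    tools = [
        Tool(
            name="Search",
            func=search_function,
            description="Searches documentation",
        )
    ]

    llm = OpenAI(temperature=0)
    # A ReAct-style agent that chooses tools from their descriptions alone
    agent = initialize_agent(tools, llm, agent="zero-shot-react-description")
    response = agent.run("Find best practices for API rate limiting")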

Why Agents Are Powerful and Risky

Agents enable flexible workflows, such as multi-step reasoning or tool-based automation. However, that flexibility introduces unpredictability. Execution paths can vary, which complicates testing and cost control.

This trade-off is similar to choosing event-driven systems over synchronous flows, a topic explored in event-driven microservices with Kafka.

Real-World Scenario: Agent Overreach

In mid-sized SaaS applications, teams often start with an agent to “handle everything.” Over time, the agent accumulates tools for billing, support, analytics, and reporting. Debugging becomes difficult because behavior depends on model decisions rather than on code paths you can trace.

A common corrective pattern is to reserve agents for orchestration only, while keeping critical business logic in deterministic services.
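One way to picture this split, as a plain-Python sketch rather than LangChain code: the model's only job is to pick a tool name and an argument, while the business logic lives in ordinary functions behind an allowlist. Everything here is hypothetical (`choose_tool` stands in for the model's decision, and `INVOICES`, `get_invoice_total`, `search_docs`, and `ALLOWED_TOOLS` are illustrative names).

```python
from typing import Callable, Dict, Tuple

# Deterministic services: ordinary, unit-testable functions (hypothetical data).
INVOICES: Dict[str, float] = {"inv-1": 120.0, "inv-2": 80.5}


def get_invoice_total(invoice_id: str) -> str:
    if invoice_id not in INVOICES:
        raise KeyError(f"unknown invoice: {invoice_id}")
    return f"{INVOICES[invoice_id]:.2f}"


def search_docs(query: str) -> str:
    return f"docs results for '{query}'"


# The orchestrator may only dispatch to tools on this allowlist.
ALLOWED_TOOLS: Dict[str, Callable[[str], str]] = {
    "invoice_total": get_invoice_total,
    "search_docs": search_docs,
}


def choose_tool(user_request: str) -> Tuple[str, str]:
    """Stand-in for the model's decision: returns (tool_name, argument)."""
    if "invoice" in user_request:
        return "invoice_total", user_request.split()[-1]
    return "search_docs", user_request


def orchestrate(user_request: str) -> str:
    tool_name, argument = choose_tool(user_request)
    if tool_name not in ALLOWED_TOOLS:
        raise ValueError(f"tool not allowed: {tool_name}")
    # The calculation itself never depends on model output.
    return ALLOWED_TOOLS[tool_name](argument)


print(orchestrate("total for invoice inv-1"))
```

If the routing decision is wrong, the worst case is a call to the wrong allowlisted function; the billing arithmetic itself stays in code you can test without a model.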

Memory: Managing Context Over Time

Memory in LangChain controls what information persists across interactions. Without memory, every LLM call is stateless. With memory, conversations and workflows can build context gradually.

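    # Classic LangChain API; newer releases deprecate ConversationChain.
    from langchain.memory import ConversationBufferMemory
    from langchain.chains import ConversationChain
    from langchain.llms import OpenAI

    # Buffer memory stores the full transcript and replays it on every call
    memory = ConversationBufferMemory()
    conversation = ConversationChain(
        llm=OpenAI(),
        memory=memory,
    )

    conversation.predict(input="Explain OAuth2 simply")
    # The second call can resolve "it" because the first exchange is in memory
    conversation.predict(input="Now compare it to JWT")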

Types of Memory and Their Trade-Offs

Simple buffer memory is easy to use but grows unbounded. Summary-based memory reduces token usage but risks losing nuance. Vector-based memory allows retrieval but adds infrastructure complexity.
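To make the first trade-off concrete, here is a sketch of a bounded buffer that evicts the oldest messages once a budget is exceeded. `WindowMemory` is a hypothetical class written for this example, not a LangChain type, and the token count is approximated by whitespace-separated words, which is an assumption; a real system would use the model's tokenizer.

```python
from collections import deque
from typing import Deque, Tuple


class WindowMemory:
    """Keep the newest messages whose combined size fits a budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages: Deque[Tuple[str, str]] = deque()

    @staticmethod
    def count_tokens(text: str) -> int:
        # Rough approximation (assumption): one token per word.
        return len(text.split())

    def total_tokens(self) -> int:
        return sum(self.count_tokens(text) for _, text in self.messages)

    def add(self, role: str, text: str) -> None:
        self.messages.append((role, text))
        # Evict the oldest messages until the buffer fits the budget again,
        # but always keep the message that was just added.
        while self.total_tokens() > self.max_tokens and len(self.messages) > 1:
            self.messages.popleft()

    def as_prompt(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.messages)


memory = WindowMemory(max_tokens=10)
memory.add("user", "Explain OAuth2 simply")
memory.add("ai", "OAuth2 delegates access using tokens")
memory.add("user", "Now compare it to JWT")
print(memory.as_prompt())
```

With a budget of 10, the first user message is dropped when the third arrives. That is the nuance a window loses, and it is what summary-based memory tries to preserve at the cost of an extra model call.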

These decisions resemble state persistence strategies discussed in multi-tenant SaaS app design, where uncontrolled growth quickly becomes a scalability issue.

Memory Is Not a Database

A common misconception is treating memory as long-term storage. In practice, memory should hold context, not truth. Authoritative data should always come from your backend or retrieval layer.
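A small sketch of that separation, under the assumption that customer data lives in a backend you can query: the prompt combines conversational history with a fresh lookup, so a stale claim in the history never overrides the current record. `PLANS`, `fetch_plan`, and `build_prompt` are hypothetical names.

```python
from typing import Dict, List

# Authoritative store (stand-in for a database or API; hypothetical data).
PLANS: Dict[str, str] = {"acme": "starter"}


def fetch_plan(customer_id: str) -> str:
    """Always read the current value from the source of truth."""
    return PLANS[customer_id]


def build_prompt(history: List[str], customer_id: str, question: str) -> str:
    # History supplies conversational context only; facts are looked up fresh.
    facts = f"Current plan: {fetch_plan(customer_id)}"
    context = "\n".join(history)
    return f"{facts}\n\nConversation so far:\n{context}\n\nQuestion: {question}"


history = ["user: I am on the starter plan", "ai: Noted."]
PLANS["acme"] = "enterprise"  # the plan changed after the conversation began
prompt = build_prompt(history, "acme", "Which features do I have?")
print(prompt)
```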

Combining Chains, Agents, and Memory

Most production LangChain systems follow a layered approach:

- Chains handle predictable transformations

- Agents orchestrate tools and decisions

- Memory provides short- or mid-term context

This structure closely resembles layered application design. If you have worked with clean architecture patterns, the parallels are intentional.

Testing and Observability

Testing chains is straightforward because every input passes through the same fixed steps, so the model call can be stubbed and each step checked in isolation.

By contrast, agents are harder to test due to their non-deterministic execution paths. In practice, teams test agents by validating tool calls rather than text output.
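A sketch of that style of test, using only the standard library: the tool is wrapped so that every call is recorded, and the assertions target the recorded calls rather than the generated text. `RecordingTool` and `fake_agent` are hypothetical; in a real test the actual agent would run against the recording wrapper.

```python
from typing import Callable, List


class RecordingTool:
    """Wraps a tool function and records every argument it is called with."""

    def __init__(self, name: str, func: Callable[[str], str]) -> None:
        self.name = name
        self.func = func
        self.calls: List[str] = []

    def __call__(self, argument: str) -> str:
        self.calls.append(argument)
        return self.func(argument)


def fake_agent(question: str, search: RecordingTool) -> str:
    """Stand-in for an agent run (assumption): one search, then an answer."""
    evidence = search(question)
    return f"Based on the docs: {evidence}"


def test_agent_searches_before_answering() -> None:
    search = RecordingTool("Search", lambda q: "use a token bucket")
    answer = fake_agent("API rate limiting", search)
    # Assert on the tool call, which is stable, not on the exact wording.
    assert search.calls == ["API rate limiting"]
    assert answer  # only check that some answer was produced


test_agent_searches_before_answering()
```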

For broader testing strategies, concepts from unit testing with Jest and Vitest in modern JS projects translate well to LLM pipelines.

Cost, Reliability, and Safety Considerations

LangChain simplifies orchestration but does not eliminate operational concerns.

- Limit memory growth

- Validate all tool inputs

- Set strict token budgets

- Log intermediate steps for debugging

These safeguards align with general API protection strategies outlined in API rate limiting 101.
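Three of these safeguards (input validation, a token budget, and step logging) can be sketched in a few lines of plain Python. The names `TokenBudget`, `BudgetExceeded`, `validate_query`, and `run_step` are hypothetical, and token cost is approximated by word count, which is an assumption rather than how any provider bills.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


class BudgetExceeded(RuntimeError):
    pass


class TokenBudget:
    """Tracks approximate token spend across a single request."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.used = 0

    def charge(self, text: str) -> None:
        # Rough approximation (assumption): one token per word.
        cost = len(text.split())
        if self.used + cost > self.limit:
            raise BudgetExceeded(f"{self.used + cost} > {self.limit} tokens")
        self.used += cost


def validate_query(query: str, max_length: int = 200) -> str:
    """Reject empty or oversized tool input before the tool runs."""
    cleaned = query.strip()
    if not cleaned or len(cleaned) > max_length:
        raise ValueError("invalid tool input")
    return cleaned


def run_step(name: str, tool: Callable[[str], str], query: str,
             budget: TokenBudget) -> str:
    query = validate_query(query)
    budget.charge(query)
    result = tool(query)
    budget.charge(result)
    # Log every intermediate step so a failed run can be reconstructed.
    log.info("step=%s input=%r output=%r used=%d", name, query, result, budget.used)
    return result


budget = TokenBudget(limit=10)
run_step("search", lambda q: "use a token bucket", "API rate limiting", budget)
```

A second identical call would push the total past the limit and raise `BudgetExceeded`, which is the point: the request stops instead of silently spending more.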

When to Use LangChain

- When workflows require multiple LLM steps

- When tools must be selected dynamically

- When conversational context matters

- When rapid iteration outweighs strict determinism

When NOT to Use LangChain

- When a single prompt is sufficient

- When deterministic behavior is mandatory

- When infrastructure simplicity is critical

- When costs must be tightly bounded

Common Mistakes

- Using agents for simple pipelines

- Letting memory grow unchecked

- Embedding business logic in prompts

- Skipping validation of tool outputs

- Treating LangChain as a replacement for your application framework

Conclusion and Next Steps

LangChain is most effective when its abstractions are used intentionally. Chains provide structure, agents provide flexibility, and memory provides context. When these roles are clearly separated, systems remain understandable and scalable.

As a next step, review one AI feature in your product and map which parts should be chains, which require agents, and what context truly needs memory. That exercise alone often prevents months of refactoring later.